Talos is an immutable operating system designed to only run Kubernetes. The advantage of Talos is an out-of-the-box Kubernetes install, as well as a smaller attack surface, and easier maintenance.

In this article we’ll take a look at how to bootstrap and upgrade a multi-node Talos cluster running in VMs on a Proxmox Virtual Environment 8.2 cluster. We’ll be using OpenTofu/Terraform to do this declaratively, following IaC principles.

This article can be treated as a continuation of my previous scribbles on Bootstrapping k3s with Cilium and running Kubernetes on Proxmox using Debian 12. The previous articles will hopefully form a basis with more nerdy details if my explanations fall short here.

The initial idea was inspired by this post written by Olav which is more straight to the point. I’ve added my own twists, tweaks and thoughts on his initial configuration which I hope warrants this article.

Overview#

The Kubernetes-Tofu recipe we’ll be bootstrapping today is sweetened using Cilium’s eBPF enhanced honey, subtly seasoned with the Sealed Secrets sauce, and baked at 256° GiB with volumes provisioned by Proxmox CSI Plugin. As an optional garnish we’ll top it with Intel iGPU drivers for Quick Sync Video support.

Key ingredients for this Tofu dish will be the bpg/proxmox and siderolabs/talos providers. To complete the optional bootstrapping we’ll also utilise the Mastercard/restapi and hashicorp/kubernetes providers.

At the end we’ll have three servings of Talos-Kubernetes control-plane nodes and one worker node, all clustered together. Adjust the recipe according to your needs.

We start by exploring the Talos Linux Image Factory to generate an image with the system components we need. Next we’ll create a virtual machine in Proxmox and use Talos Machine Configuration to bootstrap our Kubernetes cluster.

After kickstarting Kubernetes we take a look at how we can use the provided configuration to upgrade our cluster in-place, before discussing potential improvements to this approach.

Folder structure#

Seeing as this is a fairly long article, it might be beneficial to have an overview of the folder structure that will be used for all the resources:

🗃️
├── 📂 talos                       # Talos configuration
│   ├── 📁 image                   # Image schematic
│   ├── 📁 inline-manifests        # Bootstrapping manifests (Cilium)
│   └── 📁 machine-config          # Machine config templates
└── 📂 bootstrap                   # Optional bootstrap
    ├── 📂 sealed-secrets          # Secrets management
    │   └── 📁 certificate         # Encryption keys
    ├── 📂 proxmox-csi-plugin      # CSI Driver
    └── 📂 volumes                 # Volume provisioning
        ├── 📁 persistent-volume   # Kubernetes PVs
        └── 📁 proxmox-volume      # Proxmox disk images

For a full overview of all the files check out the Summary or the repository for this article here.

Hardware#

The hardware used in this article consists of two Intel N100-based mini-PCs affectionately named euclid and cantor, and a third Intel i3-N305-based machine called abel, all with 32 GB RAM and running clustered Proxmox VE 8.2.

---

title: Overview of the Proxmox cluster used in this article

---

flowchart TB

subgraph cluster["Proxmox Cluster"]

subgraph euclid["euclid"]

vm01["VM: ctrl-01"]

end

subgraph abel["abel"]

vm00["VM: ctrl-00"]

vm10["VM: work-00"]

end

subgraph cantor["cantor"]

vm02["VM: ctrl-02"]

end

end

euclid --- abel --- cantor --- euclid

Though the cluster would benefit from Ceph, we won’t touch upon that here.

Talos Module#

Once Talos is up and running it must be configured to run and cluster properly.

It’s possible to use talosctl to do this manually, but we’ll leverage the Talos provider to do this for us, based on their own example.

To keep all the configuration in one place and avoid repeating ourselves, we can create a cluster variable to hold the values shared by the whole cluster:

variable "cluster" {

description = "Cluster configuration"

type = object({

name = string

endpoint = string

gateway = string

talos_version = string

proxmox_cluster = string

})

}

The cluster name is set via the name-variable, while the endpoint-variable signifies the main Kubernetes API endpoint. In a highly available setup this should be configured to use all control plane nodes using e.g. a load balancer. For more information on configuring a HA endpoint read the Talos documentation on “Decide the Kubernetes Endpoint”.

Sidero Labs recommends setting the optional talos_version-variable to avoid unexpected behaviour when upgrading, and we will follow that advice here.

We’ll use the gateway-variable to set the default network gateway for all nodes.

Jumping ahead a bit, the proxmox_cluster-variable will be used to configure the topology.kubernetes.io/region label to be used by our chosen CSI-controller.

The values we will use in this article are given below. Note that we’ve skipped HA configuration and set the Kubernetes API endpoint to simply be the IP of the first control-plane node.

cluster = {

name = "talos"

endpoint = "192.168.1.100"

gateway = "192.168.1.1"

talos_version = "v1.7"

proxmox_cluster = "homelab"

}

To allow for easy customisation of the nodes we’ll use a map that can be looped through to create and configure the required VMs:

variable "nodes" {

description = "Configuration for cluster nodes"

type = map(object({

host_node = string

machine_type = string

datastore_id = optional(string, "local-zfs")

ip = string

mac_address = string

vm_id = number

cpu = number

ram_dedicated = number

update = optional(bool, false)

igpu = optional(bool, false)

}))

}

In this map we’re using the hostname as a key and the node configuration as values. The host_node-variable indicates which Proxmox VE hypervisor node the VM should run on, whereas the machine_type-variable decides the node type: either controlplane or worker.

The remaining variables are for VM configuration. The less obvious ones are perhaps the datastore_id-variable, which controls where the VM disk is stored, the update-flag, which selects which image is used, and the igpu-flag, which enables passthrough of the host iGPU.

The configuration for a four-node cluster with three control-plane nodes and one worker looks like

nodes = {

"ctrl-00" = {

machine_type = "controlplane"

ip = "192.168.1.100"

mac_address = "BC:24:11:2E:C8:00"

host_node = "abel"

vm_id = 800

cpu = 8

ram_dedicated = 4096

}

"ctrl-01" = {

host_node = "euclid"

machine_type = "controlplane"

ip = "192.168.1.101"

mac_address = "BC:24:11:2E:C8:01"

vm_id = 801

cpu = 4

ram_dedicated = 4096

igpu = true

}

"ctrl-02" = {

host_node = "cantor"

machine_type = "controlplane"

ip = "192.168.1.102"

mac_address = "BC:24:11:2E:C8:02"

vm_id = 802

cpu = 4

ram_dedicated = 4096

}

"work-00" = {

host_node = "abel"

machine_type = "worker"

ip = "192.168.1.110"

mac_address = "BC:24:11:2E:08:00"

vm_id = 810

cpu = 8

ram_dedicated = 4096

igpu = true

}

}

Note that we’ve arbitrarily enabled iGPU passthrough on the ctrl-01 and work-00 nodes hosted on euclid and abel respectively.

Image Factory#

By definition, an immutable OS disallows changing components after it’s been installed. Rather than bundling everything you might need into one image, Sidero Labs, the people behind Talos, have created the Talos Linux Image Factory to let you customise which packages are included.

Talos Linux Image Factory enables us to create a Talos image with the configuration we want either through point and click, or by POSTing a YAML/JSON schematic to https://factory.talos.dev/schematics to get back a unique schematic ID.

In our example we want to install the QEMU guest agent to report VM status to the Proxmox hypervisor, as well as Intel microcode and iGPU drivers to be able to take full advantage of Quick Sync Video on Kubernetes.

The schematic for this configuration is

# tofu/talos/image/schematic.yaml
customization:
  systemExtensions:
    officialExtensions:
      - siderolabs/i915-ucode
      - siderolabs/intel-ucode
      - siderolabs/qemu-guest-agent

which yields the schematic ID

{
  "id": "dcac6b92c17d1d8947a0cee5e0e6b6904089aa878c70d66196bb1138dbd05d1a"
}

when POSTed to https://factory.talos.dev/schematics.

Combining our wanted schematic_id, version, platform, and architecture we can use

https://factory.talos.dev/image/<schematic_id>/<version>/<platform>-<architecture>.raw.gz

as a template to craft a URL to download the requested image.
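The same two steps can be sketched on the command line. This is a minimal illustration, assuming curl and sed are available; the function names are made up for this example and are not part of any Talos tooling. The POST helper is only defined, not called, since it needs network access.

```shell
#!/bin/sh
# Hypothetical helpers; the names are illustrative only.

# POST a schematic file to the Image Factory and print the JSON response.
# Needs network access, so it is defined here but not called.
post_schematic() {
  curl --silent --fail -X POST --data-binary "@$1" \
    https://factory.talos.dev/schematics
}

# Pull the "id" field out of the factory's JSON response (read from stdin).
# A plain sed match is enough for this flat, single-field document.
schematic_id_from_json() {
  sed -n 's/.*"id"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}

# Fill in the URL template: <schematic_id> <version> <platform> <arch>.
talos_image_url() {
  printf 'https://factory.talos.dev/image/%s/%s/%s-%s.raw.gz\n' "$1" "$2" "$3" "$4"
}

# Use the response shown above instead of a live request.
id="$(printf '{"id":"dcac6b92c17d1d8947a0cee5e0e6b6904089aa878c70d66196bb1138dbd05d1a"}' | schematic_id_from_json)"
talos_image_url "$id" v1.7.5 nocloud amd64
```

With network access, piping `post_schematic schematic.yaml` into `schematic_id_from_json` should give the same ID for the schematic above.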

A simplified Tofu recipe to automate the process of downloading the Talos image to a Proxmox host looks like

# tofu/simplified/image.tf

locals {

factory_url = "https://factory.talos.dev"

platform = "nocloud"

arch = "amd64"

version = "v1.7.5"

schematic = file("${path.module}/image/schematic.yaml")

schematic_id = jsondecode(data.http.schematic_id.response_body)["id"]

image_id = "${local.schematic_id}_${local.version}"

}

data "http" "schematic_id" {

url = "${local.factory_url}/schematics"

method = "POST"

request_body = local.schematic

}

resource "proxmox_virtual_environment_download_file" "this" {

node_name = "node_name"

content_type = "iso"

datastore_id = "local"

decompression_algorithm = "gz"

overwrite = false

url = "${local.factory_url}/image/${local.schematic_id}/${local.version}/${local.platform}-${local.arch}.raw.gz"

file_name = "talos-${local.schematic_id}-${local.version}-${local.platform}-${local.arch}.img"

}

Iterating on the above configuration we can craft the following recipe to allow for changing the image incrementally across our cluster

# tofu/talos/image.tf

locals {

version = var.image.version

schematic = var.image.schematic

schematic_id = jsondecode(data.http.schematic_id.response_body)["id"]

image_id = "${local.schematic_id}_${local.version}"

update_version = coalesce(var.image.update_version, var.image.version)

update_schematic = coalesce(var.image.update_schematic, var.image.schematic)

update_schematic_id = jsondecode(data.http.updated_schematic_id.response_body)["id"]

update_image_id = "${local.update_schematic_id}_${local.update_version}"

}

data "http" "schematic_id" {

url = "${var.image.factory_url}/schematics"

method = "POST"

request_body = local.schematic

}

data "http" "updated_schematic_id" {

url = "${var.image.factory_url}/schematics"

method = "POST"

request_body = local.update_schematic

}

resource "proxmox_virtual_environment_download_file" "this" {

for_each = toset(distinct([for k, v in var.nodes : "${v.host_node}_${v.update == true ? local.update_image_id : local.image_id}"]))

node_name = split("_", each.key)[0]

content_type = "iso"

datastore_id = var.image.proxmox_datastore

file_name = "talos-${split("_",each.key)[1]}-${split("_", each.key)[2]}-${var.image.platform}-${var.image.arch}.img"

url = "${var.image.factory_url}/image/${split("_", each.key)[1]}/${split("_", each.key)[2]}/${var.image.platform}-${var.image.arch}.raw.gz"

decompression_algorithm = "gz"

overwrite = false

}

with the following variable definition

variable "image" {

description = "Talos image configuration"

type = object({

factory_url = optional(string, "https://factory.talos.dev")

schematic = string

version = string

update_schematic = optional(string)

update_version = optional(string)

arch = optional(string, "amd64")

platform = optional(string, "nocloud")

proxmox_datastore = optional(string, "local")

})

}

Here we’ve keyed the proxmox_virtual_environment_download_file resource to be <host>_<schematic_id>_<version>, which will trigger a recreation of the connected VM only when the node update variable is changed.
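To make the key format concrete, here is a small shell sketch that takes such a key apart in the same way the `split("_", each.key)` calls do. The function name and the shortened schematic ID are made up for illustration.

```shell
#!/bin/sh
# Illustrative only: mimic split("_", each.key) for a three-part key.
split_image_key() (
  key="$1"
  host="${key%%_*}"           # everything before the first "_"
  rest="${key#*_}"            # drop "<host>_"
  schematic_id="${rest%%_*}"  # next field
  version="${rest#*_}"        # remainder
  printf 'host=%s\nschematic_id=%s\nversion=%s\n' "$host" "$schematic_id" "$version"
)

# Shortened schematic ID for readability.
split_image_key "abel_dcac6b92c17d_v1.7.5"
```

Note that the scheme relies on `_` not appearing in any of the three parts. A host name containing an underscore would shift the fields.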

At the time of writing, the v0.6.0-alpha.1 pre-release of the Talos provider has built-in support for generating schematic IDs, so this step can probably be simplified in the future.

For more details on how to customize Talos images check the Talos Image Factory GitHub repository for documentation.

If you’re partial to NVIDIA GPU acceleration, Talos has instructions on how to enable this in their documentation, so there’s no need to cover it here.

Client Configuration#

The first step in setting up Talos machines is to create machine secrets and client configuration shared by all nodes.

The talos_machine_secrets resource generates the certificates shared between the nodes for security. Its only optional argument is talos_version.

resource "talos_machine_secrets" "this" {

talos_version = var.cluster.talos_version

}

Next we generate talos_client_configuration where we set the cluster_name and add the client configuration from the previous resource.

data "talos_client_configuration" "this" {

cluster_name = var.cluster.name

client_configuration = talos_machine_secrets.this.client_configuration

nodes = [for k, v in var.nodes : v.ip]

endpoints = [for k, v in var.nodes : v.ip if v.machine_type == "controlplane"]

}

As for optional configuration we’ve added all the nodes from our input variable and only the controlplane nodes as endpoints. Read the talosctl documentation for the difference between nodes and endpoints.
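As a rough illustration of that difference: endpoints are the addresses talosctl connects to, while nodes are the machines the command is aimed at. The sketch below only prints a talosctl command line instead of running it; the wrapper function is made up for this example, while `--endpoints`, `--nodes` and the `version` subcommand are regular talosctl options.

```shell
#!/bin/sh
# Illustrative dry run: print a talosctl command line instead of executing it.
# Arguments: <comma-separated endpoints> <comma-separated nodes> <subcommand...>
talosctl_cmd() (
  endpoints="$1"
  nodes="$2"
  shift 2
  printf 'talosctl --endpoints %s --nodes %s %s\n' "$endpoints" "$nodes" "$*"
)

# Ask the worker for its version, going through the three control-plane nodes.
talosctl_cmd 192.168.1.100,192.168.1.101,192.168.1.102 192.168.1.110 version
```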

Machine Configuration#

With the client configuration in place we next need to prepare the machine configuration for the Talos nodes.

We’ve prepared separate machine-config templates for the control-plane and worker nodes.

The worker machine config simply contains the node hostname, along with the well-known topology.kubernetes.io labels which will be used by the Proxmox CSI plugin later

# tofu/talos/machine-config/worker.yaml.tftpl
machine:
  network:
    hostname: ${hostname}
  nodeLabels:
    topology.kubernetes.io/region: ${cluster_name}
    topology.kubernetes.io/zone: ${node_name}

If you’re going for a high availability setup where the nodes can move around, you should look into either the Talos or Proxmox Cloud Controller Managers to set the topology labels dynamically based on the node location.

The control-plane machine configuration starts off similar to the worker configuration, but we’re adding cluster configuration to allow scheduling on the control-plane nodes, as well as disabling the default kube-proxy which we’ll replace by the Cilium CNI using an inline bootstrap manifest.

# tofu/talos/machine-config/control-plane.yaml.tftpl
machine:
  network:
    hostname: ${hostname}
  nodeLabels:
    topology.kubernetes.io/region: ${cluster_name}
    topology.kubernetes.io/zone: ${node_name}

cluster:
  allowSchedulingOnControlPlanes: true
  network:
    cni:
      name: none
  proxy:
    disabled: true
  # Optional Gateway API CRDs
  extraManifests:
    - https://raw.githubusercontent.com/kubernetes-sigs/gateway-api/v1.1.0/config/crd/standard/gateway.networking.k8s.io_gatewayclasses.yaml
    - https://raw.githubusercontent.com/kubernetes-sigs/gateway-api/v1.1.0/config/crd/experimental/gateway.networking.k8s.io_gateways.yaml
    - https://raw.githubusercontent.com/kubernetes-sigs/gateway-api/v1.1.0/config/crd/standard/gateway.networking.k8s.io_httproutes.yaml
    - https://raw.githubusercontent.com/kubernetes-sigs/gateway-api/v1.1.0/config/crd/standard/gateway.networking.k8s.io_referencegrants.yaml
    - https://raw.githubusercontent.com/kubernetes-sigs/gateway-api/v1.1.0/config/crd/standard/gateway.networking.k8s.io_grpcroutes.yaml
    - https://raw.githubusercontent.com/kubernetes-sigs/gateway-api/v1.1.0/config/crd/experimental/gateway.networking.k8s.io_tlsroutes.yaml
  inlineManifests:
  - name: cilium-values
    contents: |
      ---
      apiVersion: v1
      kind: ConfigMap
      metadata:
        name: cilium-values
        namespace: kube-system
      data:
        values.yaml: |-
          ${indent(10, cilium_values)}
  - name: cilium-bootstrap
    contents: |
      ${indent(6, cilium_install)}

You can also add extra manifests to be applied during the bootstrap, e.g. the Gateway API CRDs if you plan to use the Gateway API.
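The `indent` calls in the template matter because the function pads every line except the first, so the placeholder itself has to sit at the target column. Below is a rough shell emulation of that behaviour, meant only as an illustration; the function name is made up, and edge cases such as a trailing newline are not handled exactly like the real function.

```shell
#!/bin/sh
# Rough emulation of Tofu's indent(n, text): pad every line except the first.
# Reads the text on stdin; the function name is made up for this sketch.
indent_like_tofu() (
  n="$1"
  pad=""
  while [ "$n" -gt 0 ]; do
    pad="$pad "
    n=$((n - 1))
  done
  # Prefix lines 2..end with the padding, leave line 1 untouched.
  sed "2,\$s/^/$pad/"
)

printf 'kubeProxyReplacement: true\nipam:\n  mode: kubernetes\n' | indent_like_tofu 10
```

The first line of the output starts at column 0, which is why the `${indent(10, cilium_values)}` placeholder in the template is itself indented to the depth of the block scalar it fills.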

The different talos_machine_configuration for each node is prepared using the following recipe

data "talos_machine_configuration" "this" {

for_each = var.nodes

cluster_name = var.cluster.name

cluster_endpoint = var.cluster.endpoint

talos_version = var.cluster.talos_version

machine_type = each.value.machine_type

machine_secrets = talos_machine_secrets.this.machine_secrets

config_patches = each.value.machine_type == "controlplane" ? [

templatefile("${path.module}/machine-config/control-plane.yaml.tftpl", {

hostname = each.key

node_name = each.value.host_node

cluster_name = var.cluster.proxmox_cluster

cilium_values = var.cilium.values

cilium_install = var.cilium.install

})

] : [

templatefile("${path.module}/machine-config/worker.yaml.tftpl", {

hostname = each.key

node_name = each.value.host_node

cluster_name = var.cluster.proxmox_cluster

})

]

}

Cilium Bootstrap#

The cilium-bootstrap inlineManifest is modified from the Talos documentation example on deploying Cilium CNI using a job to use values passed from the cilium-values inlineManifest ConfigMap.

This allows us to easily bootstrap Cilium using a values.yaml file we can reuse later.

---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: cilium-install
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: cilium-install
    namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: cilium-install
  namespace: kube-system
---
apiVersion: batch/v1
kind: Job
metadata:
  name: cilium-install
  namespace: kube-system
spec:
  backoffLimit: 10
  template:
    metadata:
      labels:
        app: cilium-install
    spec:
      restartPolicy: OnFailure
      tolerations:
        - operator: Exists
        - effect: NoSchedule
          operator: Exists
        - effect: NoExecute
          operator: Exists
        - effect: PreferNoSchedule
          operator: Exists
        - key: node-role.kubernetes.io/control-plane
          operator: Exists
          effect: NoSchedule
        - key: node-role.kubernetes.io/control-plane
          operator: Exists
          effect: NoExecute
        - key: node-role.kubernetes.io/control-plane
          operator: Exists
          effect: PreferNoSchedule
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: node-role.kubernetes.io/control-plane
                    operator: Exists
      serviceAccountName: cilium-install
      hostNetwork: true
      containers:
        - name: cilium-install
          image: quay.io/cilium/cilium-cli-ci:latest
          env:
            - name: KUBERNETES_SERVICE_HOST
              valueFrom:
                fieldRef:
                  apiVersion: v1
                  fieldPath: status.podIP
            - name: KUBERNETES_SERVICE_PORT
              value: "6443"
          volumeMounts:
            - name: values
              mountPath: /root/app/values.yaml
              subPath: values.yaml
          command:
            - cilium
            - install
            - --version=v1.16.0
            - --set
            - kubeProxyReplacement=true
            - --values
            - /root/app/values.yaml
      volumes:
        - name: values
          configMap:
            name: cilium-values

A basic values.yaml configuration that works with Talos would be the following, as suggested in the Talos documentation

kubeProxyReplacement: true

# Talos specific
# https://www.talos.dev/latest/kubernetes-guides/configuration/kubeprism/
k8sServiceHost: localhost
k8sServicePort: 7445

securityContext:
  capabilities:
    ciliumAgent: [ CHOWN,KILL,NET_ADMIN,NET_RAW,IPC_LOCK,SYS_ADMIN,SYS_RESOURCE,DAC_OVERRIDE,FOWNER,SETGID,SETUID ]
    cleanCiliumState: [ NET_ADMIN,SYS_ADMIN,SYS_RESOURCE ]

cgroup:
  autoMount:
    enabled: false
  hostRoot: /sys/fs/cgroup

# https://docs.cilium.io/en/stable/network/concepts/ipam/
ipam:
  mode: kubernetes

We can later expand on this configuration by optionally enabling L2 Announcements, IngressController, Gateway API, and/or Hubble as we’ve done in the summary section.

If you’re interested in learning more about the capabilities of Cilium, I’ve written about ARP, L2 announcements, and LB IPAM in a previous article about Migrating from MetalLB to Cilium. I’ve also authored an article about using the Cilium Gateway API implementation as a replacement for the Ingress API.

Virtual Machines#

Before we can apply the machine configuration we need to create the VMs to perform this action on.

If you’re new to Proxmox and virtual machines I’ve covered some of the configuration choices in my previous Kubernetes on Proxmox article where I also try to explain PCI-passthrough.

The proxmox_virtual_environment_vm recipe we’ll be using is pretty straightforward, except for the conditional boot-disk file_id based on whether we want an updated image or not, and the dynamic hostpci block for conditional PCI-passthrough.

# tofu/talos/virtual-machines.tf

resource "proxmox_virtual_environment_vm" "this" {

for_each = var.nodes

node_name = each.value.host_node

name = each.key

description = each.value.machine_type == "controlplane" ? "Talos Control Plane" : "Talos Worker"

tags = each.value.machine_type == "controlplane" ? ["k8s", "control-plane"] : ["k8s", "worker"]

on_boot = true

vm_id = each.value.vm_id

machine = "q35"

scsi_hardware = "virtio-scsi-single"

bios = "seabios"

agent {

enabled = true

}

cpu {

cores = each.value.cpu

type = "host"

}

memory {

dedicated = each.value.ram_dedicated

}

network_device {

bridge = "vmbr0"

mac_address = each.value.mac_address

}

disk {

datastore_id = each.value.datastore_id

interface = "scsi0"

iothread = true

cache = "writethrough"

discard = "on"

ssd = true

file_format = "raw"

size = 20

file_id = proxmox_virtual_environment_download_file.this["${each.value.host_node}_${each.value.update == true ? local.update_image_id : local.image_id}"].id

}

boot_order = ["scsi0"]

operating_system {

type = "l26" # Linux Kernel 2.6 - 6.X.

}

initialization {

datastore_id = each.value.datastore_id

ip_config {

ipv4 {

address = "${each.value.ip}/24"

gateway = var.cluster.gateway

}

}

}

dynamic "hostpci" {

for_each = each.value.igpu ? [1] : []

content {

# Passthrough iGPU

device = "hostpci0"

mapping = "iGPU"

pcie = true

rombar = true

xvga = false

}

}

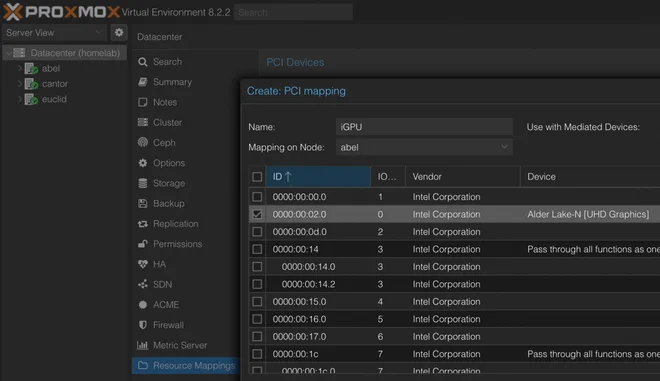

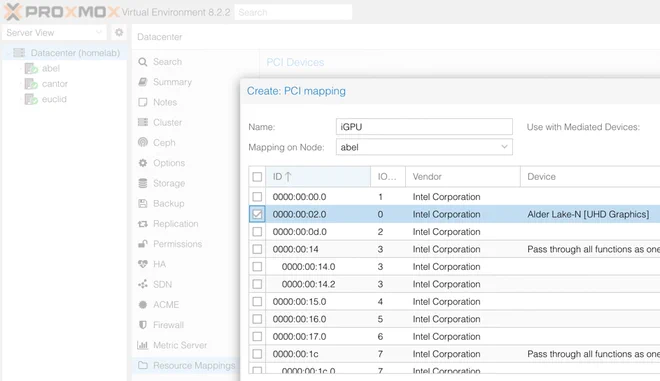

}Note that the iGPU has to be mapped in Proxmox first. This can be done under Datacenter > Resource Mappings > Add. Go through all the physical nodes and manually map the applicable iGPU for each of them as shown below.

Talos Bootstrap#

With the VMs booted up, the Talos machine configuration can be applied using the aptly named talos_machine_configuration_apply resource

resource "talos_machine_configuration_apply" "this" {

depends_on = [proxmox_virtual_environment_vm.this]

for_each = var.nodes

node = each.value.ip

client_configuration = talos_machine_secrets.this.client_configuration

machine_configuration_input = data.talos_machine_configuration.this[each.key].machine_configuration

lifecycle {

replace_triggered_by = [proxmox_virtual_environment_vm.this[each.key]]

}

}

which should depend on the VMs being up and running. The configuration should also be re-applied on changed VMs, e.g. when the boot image is updated to facilitate an upgrade.

Finally, we can bootstrap the cluster using a talos_machine_bootstrap resource

resource "talos_machine_bootstrap" "this" {

node = [for k, v in var.nodes : v.ip if v.machine_type == "controlplane"][0]

endpoint = var.cluster.endpoint

client_configuration = talos_machine_secrets.this.client_configuration

}

Note that we’ve arbitrarily picked the IP of the first control-plane node here, but also supplied the (optional) potentially load-balanced cluster endpoint.

To make sure that the cluster is up and running we probe the cluster health

data "talos_cluster_health" "this" {

depends_on = [

talos_machine_configuration_apply.this,

talos_machine_bootstrap.this

]

client_configuration = data.talos_client_configuration.this.client_configuration

control_plane_nodes = [for k, v in var.nodes : v.ip if v.machine_type == "controlplane"]

worker_nodes = [for k, v in var.nodes : v.ip if v.machine_type == "worker"]

endpoints = data.talos_client_configuration.this.endpoints

timeouts = {

read = "10m"

}

}

When the cluster is up and healthy we can fetch the kube-config file from the talos_cluster_kubeconfig data source

data "talos_cluster_kubeconfig" "this" {

depends_on = [

talos_machine_bootstrap.this,

data.talos_cluster_health.this

]

node = [for k, v in var.nodes : v.ip if v.machine_type == "controlplane"][0]

endpoint = var.cluster.endpoint

client_configuration = talos_machine_secrets.this.client_configuration

timeouts = {

read = "1m"

}

}

Here we’ve again arbitrarily picked the required node-parameter to be the first control plane node IP, but also set the optional endpoint.

Module Output#

We’ve configured the Talos module to output the client config file to be used with the talosctl tool, and the Kubernetes config file to be used with kubectl

# tofu/talos/output.tf

output "client_configuration" {

value = data.talos_client_configuration.this

sensitive = true

}

output "kube_config" {

value = data.talos_cluster_kubeconfig.this

sensitive = true

}

output "machine_config" {

value = data.talos_machine_configuration.this

}

For debug purposes we’ve also included the machine configuration, which arguably should also be marked as sensitive.

Sealed-secrets (Optional)#

Once the cluster is up and running, and with the kubeconfig-file in hand, we can start bootstrapping the cluster.

Sealed secrets promises that

[they] are safe to store in your local code repository, along with the rest of your configuration.

This makes Sealed Secrets an alternative to e.g. Secrets Store CSI Driver, and as advertised allows us to keep the secrets in the same place as the configuration.

The only gotcha with this approach is that you need to keep the decryption key. If you tear down and rebuild your cluster you thus need to inject the same encryption/decryption keys to be able to reuse the same SealedSecret objects.

To bootstrap an initial secret for this purpose we can use the following Tofu recipe

# tofu/bootstrap/sealed-secrets/config.tf

resource "kubernetes_namespace" "sealed-secrets" {

metadata {

name = "sealed-secrets"

}

}

resource "kubernetes_secret" "sealed-secrets-key" {

depends_on = [ kubernetes_namespace.sealed-secrets ]

type = "kubernetes.io/tls"

metadata {

name = "sealed-secrets-bootstrap-key"

namespace = "sealed-secrets"

labels = {

"sealedsecrets.bitnami.com/sealed-secrets-key" = "active"

}

}

data = {

"tls.crt" = var.cert.cert

"tls.key" = var.cert.key

}

}

with the cert-variable defined as

# tofu/bootstrap/sealed-secrets/variables.tf

variable "cert" {

description = "Certificate for encryption/decryption"

type = object({

cert = string

key = string

})

}

This will create the sealed-secrets namespace and populate it with a single secret which Sealed Secrets should pick up.

A valid Sealed Secrets certificate-key pair can be generated using OpenSSL by running

openssl req -x509 -days 365 -nodes -newkey rsa:4096 -keyout sealed-secrets.key -out sealed-secrets.cert -subj "/CN=sealed-secret/O=sealed-secret"

to be used as input.
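Before feeding the pair into Tofu it can be worth checking that the certificate and key actually belong together. A minimal sketch, assuming a reasonably recent OpenSSL; the function name is made up for this example.

```shell
#!/bin/sh
# Illustrative check (function name is made up): succeed only if the
# certificate and the private key carry the same public key.
cert_matches_key() (
  cert_pub="$(openssl x509 -in "$1" -noout -pubkey)" || exit 1
  key_pub="$(openssl pkey -in "$2" -pubout)" || exit 1
  [ "$cert_pub" = "$key_pub" ]
)

# Example call, assuming the files generated by the command above exist:
# cert_matches_key sealed-secrets.cert sealed-secrets.key && echo "pair matches"
```

The comparison works by extracting the public key from both files and checking that the two PEM blocks are identical.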

For increased security you should rotate the certificate and re-encrypt the secrets once in a while.

Proxmox CSI Plugin (Optional)#

Since we’re running on Proxmox, a natural choice for a CSI (Container Storage Interface) driver would be utilising Proxmox.

A great alternative for this is the Proxmox CSI Plugin by Serge Logvinov to provision persistent storage for our Kubernetes cluster.

I’ve covered how to configure Proxmox CSI Plugin in Kubernetes Proxmox CSI so I’ll allow myself to be brief here.

We first need to create a CSI role in Proxmox

resource "proxmox_virtual_environment_role" "csi" {

role_id = "CSI"

privileges = [

"VM.Audit",

"VM.Config.Disk",

"Datastore.Allocate",

"Datastore.AllocateSpace",

"Datastore.Audit"

]

}

and bestow that role to a kubernetes-csi user

resource "proxmox_virtual_environment_user" "kubernetes-csi" {

user_id = "kubernetes-csi@pve"

comment = "User for Proxmox CSI Plugin"

acl {

path = "/"

propagate = true

role_id = proxmox_virtual_environment_role.csi.role_id

}

}

We then create a token for that user

resource "proxmox_virtual_environment_user_token" "kubernetes-csi-token" {

comment = "Token for Proxmox CSI Plugin"

token_name = "csi"

user_id = proxmox_virtual_environment_user.kubernetes-csi.user_id

privileges_separation = false

}

which we input in a privileged namespace (which is required according to the Proxmox CSI Plugin documentation)

resource "kubernetes_namespace" "csi-proxmox" {

metadata {

name = "csi-proxmox"

labels = {

"pod-security.kubernetes.io/enforce" = "privileged"

"pod-security.kubernetes.io/audit" = "baseline"

"pod-security.kubernetes.io/warn" = "baseline"

}

}

}

as a secret containing the Proxmox CSI Plugin configuration

resource "kubernetes_secret" "proxmox-csi-plugin" {

metadata {

name = "proxmox-csi-plugin"

namespace = kubernetes_namespace.csi-proxmox.id

}

data = {

"config.yaml" = <<EOF

clusters:
- url: "${var.proxmox.endpoint}/api2/json"
  insecure: ${var.proxmox.insecure}
  token_id: "${proxmox_virtual_environment_user_token.kubernetes-csi-token.id}"
  token_secret: "${element(split("=", proxmox_virtual_environment_user_token.kubernetes-csi-token.value), length(split("=", proxmox_virtual_environment_user_token.kubernetes-csi-token.value)) - 1)}"
  region: ${var.proxmox.cluster_name}
EOF

}

}

Provision Volumes (Optional)#

With Proxmox CSI Plugin we can provision volumes using PersistentVolumeClaims referencing a StorageClass which uses the csi.proxmox.sinextra.dev provisioner, e.g.

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-test
  namespace: proxmox-csi-test
spec:
  storageClassName: proxmox-csi
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi

for the StorageClass

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: proxmox-csi
provisioner: csi.proxmox.sinextra.dev
parameters:
  cache: writethrough
  csi.storage.k8s.io/fstype: ext4
  storage: local-zfs

This will automatically create a VM disk in Proxmox and a corresponding PersistentVolume referencing the mounted VM disk.

The potential issue with this is that the created VM disk will be named with a random UUID, e.g. vm-9999-pvc-96bff316-1d50-45d5-b8fa-449bb3825211, which makes referencing it again after a cluster rebuild cumbersome.

A solution to this issue is to manually create a VM disk in Proxmox and a PersistentVolume referencing it, which we will do here.
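To make the naming concrete, the sketch below builds the predictable disk name and the volume handle in the same shape that the Tofu modules later in this section compose them. The function names and the `pv-test` volume name are made up for illustration; the other values are the ones used elsewhere in this article.

```shell
#!/bin/sh
# Illustrative helpers; the function names are made up for this sketch.

# Disk name used for a manually created volume: vm-<vmid>-<name>.
proxmox_disk_name() {
  printf 'vm-%s-%s\n' "$1" "$2"
}

# Volume handle: <cluster>/<node>/<storage>/<disk name>.
csi_volume_handle() {
  printf '%s/%s/%s/%s\n' "$1" "$2" "$3" "$4"
}

disk="$(proxmox_disk_name 9999 pv-test)"
csi_volume_handle homelab abel local-zfs "$disk"
```

Because every part of the handle comes from configuration rather than a generated UUID, the same PersistentVolume can be recreated after a cluster rebuild and still point at the existing disk.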

The Proxmox provider we’ve chosen doesn’t (yet) support creating VM disks directly, so we have to resort to the Proxmox VE REST API to do it. For this purpose we can use the Mastercard restapi provider to conjure the following OpenTofu recipe

# tofu/bootstrap/volumes/proxmox-volume/proxmox-volume.tf

locals {

filename = "vm-${var.volume.vmid}-${var.volume.name}"

}

resource "restapi_object" "proxmox-volume" {

path = "/api2/json/nodes/${var.volume.node}/storage/${var.volume.storage}/content/"

id_attribute = "data"

force_new = [var.volume.size]

data = jsonencode({

vmid = var.volume.vmid

filename = local.filename

size = var.volume.size

format = var.volume.format

})

lifecycle {

prevent_destroy = true

}

}

output "node" {

value = var.volume.node

}

output "storage" {

value = var.volume.storage

}

output "filename" {

value = local.filename

}

with the input variables defined as

# tofu/bootstrap/volumes/proxmox-volume/variables.tf

variable "proxmox_api" {

type = object({

endpoint = string

insecure = bool

api_token = string

})

sensitive = true

}

variable "volume" {

type = object({

name = string

node = string

size = string

storage = optional(string, "local-zfs")

vmid = optional(number, 9999)

format = optional(string, "raw")

})

}

On the Kubernetes side we create a matching PersistentVolume using the HashiCorp kubernetes provider

# tofu/bootstrap/volumes/persistent-volume/config.tf

resource "kubernetes_persistent_volume" "pv" {

metadata {

name = var.volume.name

}

spec {

capacity = {

storage = var.volume.capacity

}

access_modes = var.volume.access_modes

storage_class_name = var.volume.storage_class_name

mount_options = var.volume.mount_options

volume_mode = var.volume.volume_mode

persistent_volume_source {

csi {

driver = var.volume.driver

fs_type = var.volume.fs_type

volume_handle = var.volume.volume_handle

volume_attributes = {

cache = var.volume.cache

ssd = var.volume.ssd == true ? "true" : "false"

storage = var.volume.storage

}

}

}

}

}

providing the module with the following variables

# tofu/bootstrap/volumes/persistent-volume/variables.tf

variable "volume" {

description = "Volume configuration"

type = object({

name = string

capacity = string

volume_handle = string

access_modes = optional(list(string), ["ReadWriteOnce"])

storage_class_name = optional(string, "proxmox-csi")

fs_type = optional(string, "ext4")

driver = optional(string, "csi.proxmox.sinextra.dev")

volume_mode = optional(string, "Filesystem")

mount_options = optional(list(string), ["noatime"])

cache = optional(string, "writethrough")

ssd = optional(bool, true)

storage = optional(string, "local-zfs")

})

}

Combining the proxmox-volume and persistent-volume modules, we can use the output from the former to set the volume_handle in the latter to tie them together

# tofu/bootstrap/volumes/main.tf

module "proxmox-volume" {

for_each = var.volumes

source = "./proxmox-volume"

providers = {

restapi = restapi

}

proxmox_api = var.proxmox_api

volume = {

name = each.key

node = each.value.node

size = each.value.size

storage = each.value.storage

vmid = each.value.vmid

format = each.value.format

}

}

module "persistent-volume" {

for_each = var.volumes

source = "./persistent-volume"

providers = {

kubernetes = kubernetes

}

volume = {

name = each.key

capacity = each.value.size

volume_handle = "${var.proxmox_api.cluster_name}/${module.proxmox-volume[each.key].node}/${module.proxmox-volume[each.key].storage}/${module.proxmox-volume[each.key].filename}"

storage = each.value.storage

}

}

This aggregated volumes module takes Proxmox API details and a map of volumes as input

# tofu/bootstrap/volumes/variables.tf

variable "proxmox_api" {

type = object({

endpoint = string

insecure = bool

api_token = string

cluster_name = string

})

sensitive = true

}

variable "volumes" {

type = map(

object({

node = string

size = string

storage = optional(string, "local-zfs")

vmid = optional(number, 9999)

format = optional(string, "raw")

})

)

}

To provision a 4 GB PersistentVolume backed by a Proxmox VM disk attached to e.g. the abel node you can supply

volumes = {

pv-test = {

node = "abel"

size = "4G"

}

}

as input to the volumes module.
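Only node and size are required, so the remaining attributes fall back to the defaults declared in the volumes variable. As a small sketch of how several volumes with overrides could be declared, here is an input with hypothetical volume names (pv-small and pv-large) and an illustrative size:

```hcl
# Hypothetical volume names and sizes, for illustration only.
volumes = {
  # Relies on the defaults: storage = "local-zfs", vmid = 9999, format = "raw"
  pv-small = {
    node = "abel"
    size = "4G"
  }
  # Spells out the optional attributes explicitly
  pv-large = {
    node    = "euclid"
    size    = "32G"
    storage = "local-zfs"
    vmid    = 9999
    format  = "raw"
  }
}
```

Each map key becomes both the PersistentVolume name and part of the VM disk filename, so it should be unique and a valid Kubernetes resource name.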

To claim this volume you then reference the PV in a PersistentVolumeClaim’s volumeName field, e.g.

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: lidarr-config
  namespace: pvc-test
spec:
  storageClassName: proxmox-csi
  volumeName: pv-test
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 4G

Since we’re not running with distributed storage, e.g. Ceph, GlusterFS, or Longhorn, we have to manually specify that the pod using the PVC has to run on the same node as the Proxmox-backed volume. We can do this by referencing the corresponding topology.kubernetes.io/zone label in a pod nodeSelector, i.e.

nodeSelector:
  topology.kubernetes.io/zone: abel

Main Course#

Having prepared the main Talos dish and possibly a few of the side-courses,

we can start combining all the OpenTofu recipes into a fully-fledged meal cluster.

The providers backing the whole operation are

terraform {

required_providers {

talos = {

source = "siderolabs/talos"

version = "0.5.0"

}

proxmox = {

source = "bpg/proxmox"

version = "0.61.1"

}

kubernetes = {

source = "hashicorp/kubernetes"

version = "2.31.0"

}

restapi = {

source = "Mastercard/restapi"

version = "1.19.1"

}

}

}

with details on how to connect to the Proxmox API supplied as a variable

# tofu/variables.tf

variable "proxmox" {

type = object({

name = string

cluster_name = string

endpoint = string

insecure = bool

username = string

api_token = string

})

sensitive = true

}

As an example we can directly connect to one of the Proxmox nodes using the following variables

# tofu/proxmox.auto.tfvars

proxmox = {

name = "abel"

cluster_name = "homelab"

endpoint = "https://192.168.1.10:8006"

insecure = true

username = "root"

api_token = "root@pam!tofu=<UUID>"

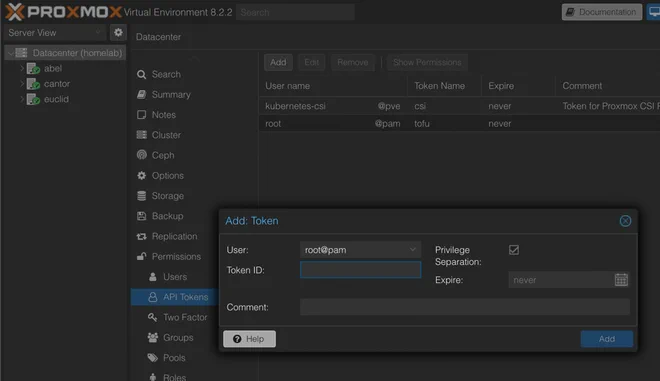

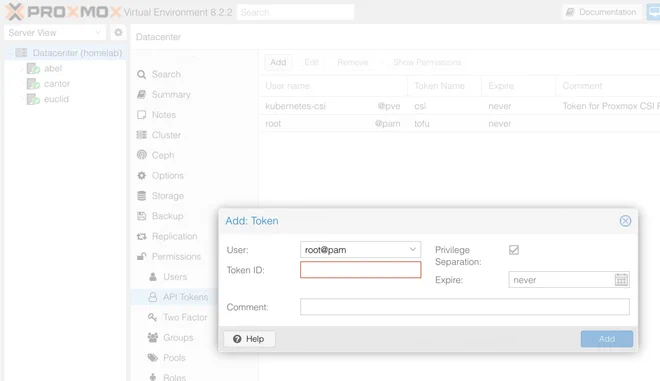

}An API token can be generated in the Datacenter > Permissions > API Tokens menu by clicking Add as shown below

The Talos provider requires no configuration, while the Proxmox provider consumes the previously defined variable

provider "proxmox" {

endpoint = var.proxmox.endpoint

insecure = var.proxmox.insecure

api_token = var.proxmox.api_token

ssh {

agent = true

username = var.proxmox.username

}

}

Next, the Kubernetes provider is configured using output from the Talos module

provider "kubernetes" {

host = module.talos.kube_config.kubernetes_client_configuration.host

client_certificate = base64decode(module.talos.kube_config.kubernetes_client_configuration.client_certificate)

client_key = base64decode(module.talos.kube_config.kubernetes_client_configuration.client_key)

cluster_ca_certificate = base64decode(module.talos.kube_config.kubernetes_client_configuration.ca_certificate)

}

Lastly the REST API provider consumes the same variable as the Proxmox provider

provider "restapi" {

uri = var.proxmox.endpoint

insecure = var.proxmox.insecure

write_returns_object = true

headers = {

"Content-Type" = "application/json"

"Authorization" = "PVEAPIToken=${var.proxmox.api_token}"

}

}

Following the provider configuration we can start to populate the talos module configuration

module "talos" {

source = "./talos"

providers = {

proxmox = proxmox

}

image = {

version = "v1.7.5"

schematic = file("${path.module}/talos/image/schematic.yaml")

}

cilium = {

install = file("${path.module}/talos/inline-manifests/cilium-install.yaml")

values = file("${path.module}/../kubernetes/cilium/values.yaml")

}

cluster = {

name = "talos"

endpoint = "192.168.1.100"

gateway = "192.168.1.1"

talos_version = "v1.7"

proxmox_cluster = "homelab"

}

nodes = {

"ctrl-00" = {

host_node = "abel"

machine_type = "controlplane"

ip = "192.168.1.100"

mac_address = "BC:24:11:2E:C8:00"

vm_id = 800

cpu = 8

ram_dedicated = 4096

}

"ctrl-01" = {

host_node = "euclid"

machine_type = "controlplane"

ip = "192.168.1.101"

mac_address = "BC:24:11:2E:C8:01"

vm_id = 801

cpu = 4

ram_dedicated = 4096

igpu = true

}

"ctrl-02" = {

host_node = "cantor"

machine_type = "controlplane"

ip = "192.168.1.102"

mac_address = "BC:24:11:2E:C8:02"

vm_id = 802

cpu = 4

ram_dedicated = 4096

}

"work-00" = {

host_node = "abel"

machine_type = "worker"

ip = "192.168.1.110"

mac_address = "BC:24:11:2E:08:00"

vm_id = 810

cpu = 8

ram_dedicated = 4096

igpu = true

}

}

}

Here we’ve supplied the Talos image schematic in an external file previously mentioned in the Image Factory section. The Cilium install script is the same as in the Cilium Bootstrap section, and the corresponding values are picked up from an external file shown in the Summary section. The remaining cluster and nodes variables are the same as from the Talos Module section for a four-node cluster.

To output the created talos- and kube-config files along with the machine configuration we can use the following recipe

# tofu/output.tf

resource "local_file" "machine_configs" {

for_each = module.talos.machine_config

content = each.value.machine_configuration

filename = "output/talos-machine-config-${each.key}.yaml"

file_permission = "0600"

}

resource "local_file" "talos_config" {

content = module.talos.client_configuration.talos_config

filename = "output/talos-config.yaml"

file_permission = "0600"

}

resource "local_file" "kube_config" {

content = module.talos.kube_config.kubeconfig_raw

filename = "output/kube-config.yaml"

file_permission = "0600"

}

output "kube_config" {

value = module.talos.kube_config.kubeconfig_raw

sensitive = true

}

output "talos_config" {

value = module.talos.client_configuration.talos_config

sensitive = true

}

This stores the configuration files under ./output, and makes it possible to show them by running

tofu output -raw kube_config

tofu output -raw talos_config

If you’ve opted to use the sealed_secrets module it can be configured by sending along the kubernetes provider and supplying it with the certificate you created in the Sealed Secrets section.

module "sealed_secrets" {

depends_on = [module.talos]

source = "./bootstrap/sealed-secrets"

providers = {

kubernetes = kubernetes

}

cert = {

cert = file("${path.module}/bootstrap/sealed-secrets/certificate/sealed-secrets.cert")

key = file("${path.module}/bootstrap/sealed-secrets/certificate/sealed-secrets.key")

}

}

The proxmox_csi_plugin module requires the proxmox and kubernetes providers along with the proxmox variable used in the main module

module "proxmox_csi_plugin" {

depends_on = [module.talos]

source = "./bootstrap/proxmox-csi-plugin"

providers = {

proxmox = proxmox

kubernetes = kubernetes

}

proxmox = var.proxmox

}

To provision storage we use the volumes module which we supply with configured restapi and kubernetes providers. We can also reuse the same proxmox variable to use the Proxmox API. The volumes are supplied as a map with the backing node and size as the only required values

module "volumes" {

depends_on = [module.proxmox_csi_plugin]

source = "./bootstrap/volumes"

providers = {

restapi = restapi

kubernetes = kubernetes

}

proxmox_api = var.proxmox

volumes = {

pv-test = {

node = "abel"

size = "4G"

}

}

}

If you want to reuse previously created volumes, e.g. during a cluster rebuild, these can be imported into the Tofu state by running

tofu import 'module.volumes.module.proxmox-volume["<VOLUME_NAME>"].restapi_object.proxmox-volume' /api2/json/nodes/<NODE>/storage/<DATASTORE_ID>/content/<DATASTORE_ID>:vm-9999-<VOLUME_NAME>

for the Proxmox VM disk and

tofu import 'module.volumes.module.persistent-volume["<VOLUME_NAME>"].kubernetes_persistent_volume.pv' <VOLUME_NAME>

for the Kubernetes persistent volume.
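If you prefer to keep the imports declarative, the same two imports can probably be expressed as import blocks in the root module. This is a sketch only. It assumes a tool version with import block support (OpenTofu 1.6 or Terraform 1.5 and later), and it reuses the pv-test volume on node abel with the default local-zfs datastore and VM ID 9999 from the example above.

```hcl
# Sketch: declarative equivalents of the two `tofu import` commands.
# Assumes the pv-test volume on node "abel" in the "local-zfs" datastore.
import {
  to = module.volumes.module.proxmox-volume["pv-test"].restapi_object.proxmox-volume
  id = "/api2/json/nodes/abel/storage/local-zfs/content/local-zfs:vm-9999-pv-test"
}

import {
  to = module.volumes.module.persistent-volume["pv-test"].kubernetes_persistent_volume.pv
  id = "pv-test"
}
```

The blocks can be removed again once a tofu apply has recorded the resources in the state.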

Kubernetes Bootstrap#

Assuming the cluster is up and running and the kubeconfig file is in the expected place you should now be able to

run kubectl get nodes and be greeted with

NAME STATUS ROLES AGE VERSION

ctrl-00 Ready control-plane 30h v1.30.0

ctrl-01 Ready control-plane 30h v1.30.0

ctrl-02 Ready control-plane 30h v1.30.0

work-00 Ready control-plane 30h v1.30.0

You’re now ready to start populating your freshly baked OpenTofu-flavoured Talos Kubernetes cluster.

Popular choices for doing this in a declarative manner are Flux CD and Argo CD.

I picked the latter and wrote an article on making use of Argo CD with Kustomize + Helm, which is what I’m currently using.

For inspiration, you can check out the configuration for my homelab here.

Upgrading the Cluster#

Talos has built-in capabilities for upgrading a cluster

using the talosctl tool.

There’s unfortunately no support yet for this in the Talos provider.

To combat this shortcoming we’ve set up the Talos module such that we can successively change the image used by each node. Assume we start with the following abbreviated module configuration
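As a rough sketch of such a starting point, assuming the same three control-plane nodes as in the Main Course section and leaving out everything that doesn’t matter for the upgrade, the module configuration could look something like this. The omitted attributes are required by the module, so this is not applicable as-is.

```hcl
# Abbreviated sketch: providers, cilium, cluster and most node attributes are omitted.
module "talos" {
  source = "./talos"

  image = {
    version   = "v1.7.4"
    schematic = file("${path.module}/talos/image/schematic.yaml")
  }

  nodes = {
    "ctrl-00" = {
      host_node    = "abel"
      machine_type = "controlplane"
    }
    "ctrl-01" = {
      host_node    = "euclid"
      machine_type = "controlplane"
    }
    "ctrl-02" = {
      host_node    = "cantor"
      machine_type = "controlplane"
    }
  }
}
```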


Using the above configuration and running kubectl get nodes -o wide should give us something similar to

NAME STATUS ROLES VERSION OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

ctrl-00 Ready control-plane v1.30.0 Talos (v1.7.4) 6.6.32-talos containerd://1.7.16

ctrl-01 Ready control-plane v1.30.0 Talos (v1.7.4) 6.6.32-talos containerd://1.7.16

ctrl-02 Ready control-plane v1.30.0 Talos (v1.7.4) 6.6.32-talos containerd://1.7.16

which shows all nodes running on Talos v1.7.4.

By adding update_version = "v1.7.5" to the image configuration and setting update = true on ctrl-02 to tell it to use the updated image, we can update that node
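In terms of the module input this amounts to two small changes. The fragment below is a sketch under the same assumptions as before, with unrelated attributes left out, and uses the update_version and update attributes declared in tofu/talos/variables.tf.

```hcl
# Abbreviated sketch: only the attributes relevant to the upgrade are shown.
module "talos" {
  source = "./talos"

  image = {
    version        = "v1.7.4"
    update_version = "v1.7.5" # image used by nodes flagged with update = true
    schematic      = file("${path.module}/talos/image/schematic.yaml")
  }

  nodes = {
    "ctrl-00" = {
      host_node    = "abel"
      machine_type = "controlplane"
    }
    "ctrl-01" = {
      host_node    = "euclid"
      machine_type = "controlplane"
    }
    "ctrl-02" = {
      host_node    = "cantor"
      machine_type = "controlplane"
      update       = true # switch this node to the updated image
    }
  }
}
```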


After ideally cordoning and draining node ctrl-02 and then running tofu apply, we should eventually be met with

NAME STATUS ROLES VERSION OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

ctrl-00 Ready control-plane v1.30.0 Talos (v1.7.4) 6.6.32-talos containerd://1.7.16

ctrl-01 Ready control-plane v1.30.0 Talos (v1.7.4) 6.6.32-talos containerd://1.7.16

ctrl-02 Ready control-plane v1.30.0 Talos (v1.7.5) 6.6.33-talos containerd://1.7.18

indicating that node ctrl-02 is now running Talos v1.7.5.

Performing the same ritual for node ctrl-01


we should in time be greeted with the following cluster status

NAME STATUS ROLES VERSION OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

ctrl-00 Ready control-plane v1.30.0 Talos (v1.7.4) 6.6.32-talos containerd://1.7.16

ctrl-01 Ready control-plane v1.30.0 Talos (v1.7.5) 6.6.33-talos containerd://1.7.18

ctrl-02 Ready control-plane v1.30.0 Talos (v1.7.5) 6.6.33-talos containerd://1.7.18

indicating that only ctrl-00 is left on version 1.7.4.

To complete the upgrade we can update the main image version to v1.7.5, and remove the update flag for nodes ctrl-01 and ctrl-02.
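The image part of the module input would then end up roughly as in the fragment below. It is again a sketch with everything else left out. Whether update_version is removed or simply left equal to version should not matter, since no node refers to it any more.

```hcl
# Abbreviated sketch of the final state: no node carries update = true any more.
module "talos" {
  source = "./talos"

  image = {
    version   = "v1.7.5"
    schematic = file("${path.module}/talos/image/schematic.yaml")
  }

  # nodes = { ... } as in the starting configuration, without any update flags
}
```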


Successfully running tofu apply a third time should ultimately yield all nodes running on Talos v1.7.5 with upgraded

kernel and containerd versions.

NAME STATUS ROLES VERSION OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

ctrl-00 Ready control-plane v1.30.0 Talos (v1.7.5) 6.6.33-talos containerd://1.7.18

ctrl-01 Ready control-plane v1.30.0 Talos (v1.7.5) 6.6.33-talos containerd://1.7.18

ctrl-02 Ready control-plane v1.30.0 Talos (v1.7.5) 6.6.33-talos containerd://1.7.18

Potential improvements#

The above recipe is fairly complex, and I’m sure not without room for improvement.

Below are some of the improvements I’ve thought about while writing this article. If you have any comments or other ideas for improvement I would love to hear from you!

Talos Linux Image Schematic ID#

The 0.6.0 release of the Talos Provider promises support for their Image Factory. An improvement would be to change the current custom code to fetch the schematic ID to use Sidero Labs’ own implementation.

The ability to use different schematics for different nodes would also be interesting, though it would add complexity to the current upgrade procedure.
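To give an idea of what that could look like, here is a sketch of how the two http data sources in tofu/talos/image.tf might be replaced. It assumes version 0.6.0 or later of the siderolabs/talos provider, and that the talos_image_factory_schematic resource and talos_image_factory_urls data source behave as I understand them from the provider documentation. Treat the attribute names as assumptions to verify before use.

```hcl
# Sketch only: assumes siderolabs/talos >= 0.6.0. Verify attribute names against the provider docs.
resource "talos_image_factory_schematic" "this" {
  # Same YAML schematic as before, now registered through the provider
  schematic = var.image.schematic
}

data "talos_image_factory_urls" "this" {
  talos_version = var.image.version
  schematic_id  = talos_image_factory_schematic.this.id
  platform      = var.image.platform
  architecture  = var.image.arch
}

locals {
  schematic_id   = talos_image_factory_schematic.this.id
  image_id       = "${local.schematic_id}_${var.image.version}"
  disk_image_url = data.talos_image_factory_urls.this.urls.disk_image
}
```

A second pair of resources would be needed for the update schematic to keep the current upgrade procedure working.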

Machine config#

The possibility of overriding machine config to allow for a non-homogeneous cluster would probably be a nifty feature to have, though I see no pressing need for one.

There’s also a lot more that can be configured using the machine config, as e.g. Bernd Schorgers (bjw-s) has done here.

Networking#

The current implementation uses the default Proxmox network bridge. An improvement would be to create a dedicated subnet for the Kubernetes cluster and configure firewall rules.

Serge Logvinov has done some work on this that might be interesting to check out properly.

Load balancing and IPv6 would also be interesting to implement.

Storage#

Implementing Ceph for distributed storage would be a great improvement to allow pods to not be bound to a single physical hypervisor node. It would also allow some form of fail-over in case a node should become unresponsive.

Petitioning the maintainers of the Proxmox provider used here to be able to directly create VM disks would allow us to ditch the Mastercard REST API provider in favour of fewer dependencies and possibly a more streamlined experience.

I’ve opened a GitHub issue with the maintainers of the Proxmox provider to ask for this feature.

Cluster upgrading#

I find the current approach to upgrading the cluster by destroying and recreating VMs to not be the most elegant

solution.

A better solution would be to hook into the already existing talosctl capabilities to gracefully upgrade the cluster

as suggested by this GitHub issue,

though this reply is

somewhat discouraging.

Currently, changing the cluster.talos_version variable will destroy and recreate the whole cluster,

something that might not be wanted when upgrading from e.g. v1.7.5 to v1.8.0 when that version becomes available.
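Until the provider offers something better, one possible stop-gap is to shell out to talosctl from OpenTofu. The sketch below is an untested assumption on my part, not something used in this article. It presumes talosctl is installed where tofu runs, that the resource lives inside the talos module next to the locals from image.tf, and that the talosconfig has already been written to the hypothetical path output/talos-config.yaml.

```hcl
# Untested sketch: in-place upgrade via talosctl instead of recreating the VM.
resource "terraform_data" "talos_upgrade" {
  for_each = var.nodes

  # Re-run the provisioner whenever the target image changes
  triggers_replace = [local.image_id]

  provisioner "local-exec" {
    # The installer image lives under <factory host>/installer/<schematic id>:<version>
    command = join(" ", [
      "talosctl upgrade",
      "--talosconfig output/talos-config.yaml", # hypothetical path, adjust to your setup
      "--nodes ${each.value.ip}",
      "--image ${trimprefix(var.image.factory_url, "https://")}/installer/${local.schematic_id}:${local.version}",
    ])
  }
}
```

Note that for_each instances are processed in parallel by default, so this would try to upgrade several nodes at once unless tofu apply is run with -parallelism=1. The provisioner also runs on first creation, and the VM resources would need to stop reacting to image changes for this to replace the current procedure.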

Summary#

The resources created in this article can be found in the repository hosting the code for this site at GitLab.

You can find a snapshot of my homelab IaC configuration running this setup on GitHub.

🗃️
├── 📂 kubernetes
│   └── 📂 cilium
│       ├── 📋 kustomization.yaml
│       ├── 📄 announce.yaml
│       ├── 📄 ip-pool.yaml
│       └── 📄 values.yaml
└── 📂 tofu
    ├── 📝 providers.tf
    ├── 📝 variables.tf
    ├── 📃 proxmox.auto.tfvars
    ├── 📝 main.tf
    ├── 📝 output.tf
    ├── 📂 talos
    │   ├── 📝 providers.tf
    │   ├── 📝 variables.tf
    │   ├── 📝 image.tf
    │   ├── 📝 config.tf
    │   ├── 📝 virtual-machines.tf
    │   ├── 📝 output.tf
    │   ├── 📂 image
    │   │   └── 📄 schematic.yaml
    │   ├── 📂 machine-config
    │   │   ├── 📋 control-plane.yaml.tftpl
    │   │   └── 📋 worker.yaml.tftpl
    │   └── 📂 inline-manifests
    │       └── 📄 cilium-install.yaml
    └── 📂 bootstrap
        ├── 📂 sealed-secrets
        │   ├── 📝 providers.tf
        │   ├── 📝 variables.tf
        │   └── 📝 config.tf
        ├── 📂 proxmox-csi-plugin
        │   ├── 📝 providers.tf
        │   ├── 📝 variables.tf
        │   └── 📝 config.tf
        └── 📂 volumes
            ├── 📂 persistent-volume
            │   ├── 📝 providers.tf
            │   ├── 📝 variables.tf
            │   └── 📝 config.tf
            ├── 📂 proxmox-volume
            │   ├── 📝 providers.tf
            │   ├── 📝 variables.tf
            │   └── 📝 config.tf
            ├── 📝 providers.tf
            ├── 📝 variables.tf
            └── 📝 main.tf

Main Kubernetes Module#

# tofu/providers.tf

terraform {

required_providers {

talos = {

source = "siderolabs/talos"

version = "0.5.0"

}

proxmox = {

source = "bpg/proxmox"

version = "0.61.1"

}

kubernetes = {

source = "hashicorp/kubernetes"

version = "2.31.0"

}

restapi = {

source = "Mastercard/restapi"

version = "1.19.1"

}

}

}

provider "proxmox" {

endpoint = var.proxmox.endpoint

insecure = var.proxmox.insecure

api_token = var.proxmox.api_token

ssh {

agent = true

username = var.proxmox.username

}

}

provider "kubernetes" {

host = module.talos.kube_config.kubernetes_client_configuration.host

client_certificate = base64decode(module.talos.kube_config.kubernetes_client_configuration.client_certificate)

client_key = base64decode(module.talos.kube_config.kubernetes_client_configuration.client_key)

cluster_ca_certificate = base64decode(module.talos.kube_config.kubernetes_client_configuration.ca_certificate)

}

provider "restapi" {

uri = var.proxmox.endpoint

insecure = var.proxmox.insecure

write_returns_object = true

headers = {

"Content-Type" = "application/json"

"Authorization" = "PVEAPIToken=${var.proxmox.api_token}"

}

}

# tofu/variables.tf

variable "proxmox" {

type = object({

name = string

cluster_name = string

endpoint = string

insecure = bool

username = string

api_token = string

})

sensitive = true

}

# tofu/proxmox.auto.tfvars

proxmox = {

name = "abel"

cluster_name = "homelab"

endpoint = "https://192.168.1.10:8006"

insecure = true

username = "root"

api_token = "root@pam!tofu=<UUID>"

}

# tofu/main.tf

module "talos" {

source = "./talos"

providers = {

proxmox = proxmox

}

image = {

version = "v1.7.5"

schematic = file("${path.module}/talos/image/schematic.yaml")

}

cilium = {

install = file("${path.module}/talos/inline-manifests/cilium-install.yaml")

values = file("${path.module}/../kubernetes/cilium/values.yaml")

}

cluster = {

name = "talos"

endpoint = "192.168.1.100"

gateway = "192.168.1.1"

talos_version = "v1.7"

proxmox_cluster = "homelab"

}

nodes = {

"ctrl-00" = {

host_node = "abel"

machine_type = "controlplane"

ip = "192.168.1.100"

mac_address = "BC:24:11:2E:C8:00"

vm_id = 800

cpu = 8

ram_dedicated = 4096

}

"ctrl-01" = {

host_node = "euclid"

machine_type = "controlplane"

ip = "192.168.1.101"

mac_address = "BC:24:11:2E:C8:01"

vm_id = 801

cpu = 4

ram_dedicated = 4096

igpu = true

}

"ctrl-02" = {

host_node = "cantor"

machine_type = "controlplane"

ip = "192.168.1.102"

mac_address = "BC:24:11:2E:C8:02"

vm_id = 802

cpu = 4

ram_dedicated = 4096

}

"work-00" = {

host_node = "abel"

machine_type = "worker"

ip = "192.168.1.110"

mac_address = "BC:24:11:2E:08:00"

vm_id = 810

cpu = 8

ram_dedicated = 4096

igpu = true

}

}

}

module "sealed_secrets" {

depends_on = [module.talos]

source = "./bootstrap/sealed-secrets"

providers = {

kubernetes = kubernetes

}

cert = {

cert = file("${path.module}/bootstrap/sealed-secrets/certificate/sealed-secrets.cert")

key = file("${path.module}/bootstrap/sealed-secrets/certificate/sealed-secrets.key")

}

}

module "proxmox_csi_plugin" {

depends_on = [module.talos]

source = "./bootstrap/proxmox-csi-plugin"

providers = {

proxmox = proxmox

kubernetes = kubernetes

}

proxmox = var.proxmox

}

module "volumes" {

depends_on = [module.proxmox_csi_plugin]

source = "./bootstrap/volumes"

providers = {

restapi = restapi

kubernetes = kubernetes

}

proxmox_api = var.proxmox

volumes = {

pv-test = {

node = "abel"

size = "4G"

}

}

}

# tofu/output.tf

resource "local_file" "machine_configs" {

for_each = module.talos.machine_config

content = each.value.machine_configuration

filename = "output/talos-machine-config-${each.key}.yaml"

file_permission = "0600"

}

resource "local_file" "talos_config" {

content = module.talos.client_configuration.talos_config

filename = "output/talos-config.yaml"

file_permission = "0600"

}

resource "local_file" "kube_config" {

content = module.talos.kube_config.kubeconfig_raw

filename = "output/kube-config.yaml"

file_permission = "0600"

}

output "kube_config" {

value = module.talos.kube_config.kubeconfig_raw

sensitive = true

}

output "talos_config" {

value = module.talos.client_configuration.talos_config

sensitive = true

}

Cilium#

# kubernetes/cilium/kustomization.yaml
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization

resources:
  - announce.yaml
  - ip-pool.yaml

helmCharts:
  - name: cilium
    repo: https://helm.cilium.io
    version: 1.16.1
    releaseName: "cilium"
    includeCRDs: true
    namespace: kube-system
    valuesFile: values.yaml

# kubernetes/cilium/announce.yaml
apiVersion: cilium.io/v2alpha1
kind: CiliumL2AnnouncementPolicy
metadata:
  name: default-l2-announcement-policy
  namespace: kube-system
spec:
  externalIPs: true
  loadBalancerIPs: true

# kubernetes/cilium/ip-pool.yaml
apiVersion: cilium.io/v2alpha1
kind: CiliumLoadBalancerIPPool
metadata:
  name: ip-pool
spec:
  blocks:
    - start: 192.168.1.220
      stop: 192.168.1.255

# kubernetes/cilium/values.yaml
cluster:
  name: talos
  id: 1

kubeProxyReplacement: true

# Talos specific
k8sServiceHost: localhost
k8sServicePort: 7445
securityContext:
  capabilities:
    ciliumAgent: [ CHOWN, KILL, NET_ADMIN, NET_RAW, IPC_LOCK, SYS_ADMIN, SYS_RESOURCE, DAC_OVERRIDE, FOWNER, SETGID, SETUID ]
    cleanCiliumState: [ NET_ADMIN, SYS_ADMIN, SYS_RESOURCE ]

cgroup:
  autoMount:
    enabled: false
  hostRoot: /sys/fs/cgroup

# https://docs.cilium.io/en/stable/network/concepts/ipam/
ipam:
  mode: kubernetes

operator:
  rollOutPods: true
  resources:
    limits:
      cpu: 500m
      memory: 256Mi
    requests:
      cpu: 50m
      memory: 128Mi

# Roll out cilium agent pods automatically when ConfigMap is updated.
rollOutCiliumPods: true
resources:
  limits:
    cpu: 1000m
    memory: 1Gi
  requests:
    cpu: 200m
    memory: 512Mi

#debug:
#  enabled: true

# Increase rate limit when doing L2 announcements
k8sClientRateLimit:
  qps: 20
  burst: 100

l2announcements:
  enabled: true

externalIPs:
  enabled: true

enableCiliumEndpointSlice: true

loadBalancer:
  # https://docs.cilium.io/en/stable/network/kubernetes/kubeproxy-free/#maglev-consistent-hashing
  algorithm: maglev

gatewayAPI:
  enabled: true
envoy:
  securityContext:
    capabilities:
      keepCapNetBindService: true
      envoy: [ NET_ADMIN, PERFMON, BPF ]

ingressController:
  enabled: true
  default: true
  loadbalancerMode: shared
  service:
    annotations:
      io.cilium/lb-ipam-ips: 192.168.1.223

hubble:
  enabled: true
  relay:
    enabled: true
    rollOutPods: true
  ui:
    enabled: true
    rollOutPods: true

Talos Module#

# tofu/talos/providers.tf

terraform {

required_providers {

proxmox = {

source = "bpg/proxmox"

version = ">=0.60.0"

}

talos = {

source = "siderolabs/talos"

version = ">=0.5.0"

}

}

}

# tofu/talos/variables.tf

variable "image" {

description = "Talos image configuration"

type = object({

factory_url = optional(string, "https://factory.talos.dev")

schematic = string

version = string

update_schematic = optional(string)

update_version = optional(string)

arch = optional(string, "amd64")

platform = optional(string, "nocloud")

proxmox_datastore = optional(string, "local")

})

}

variable "cluster" {

description = "Cluster configuration"

type = object({

name = string

endpoint = string

gateway = string

talos_version = string

proxmox_cluster = string

})

}

variable "nodes" {

description = "Configuration for cluster nodes"

type = map(object({

host_node = string

machine_type = string

datastore_id = optional(string, "local-zfs")

ip = string

mac_address = string

vm_id = number

cpu = number

ram_dedicated = number

update = optional(bool, false)

igpu = optional(bool, false)

}))

}

variable "cilium" {

description = "Cilium configuration"

type = object({

values = string

install = string

})

}

# tofu/talos/image.tf

locals {

version = var.image.version

schematic = var.image.schematic

schematic_id = jsondecode(data.http.schematic_id.response_body)["id"]

image_id = "${local.schematic_id}_${local.version}"

update_version = coalesce(var.image.update_version, var.image.version)

update_schematic = coalesce(var.image.update_schematic, var.image.schematic)

update_schematic_id = jsondecode(data.http.updated_schematic_id.response_body)["id"]

update_image_id = "${local.update_schematic_id}_${local.update_version}"

}

data "http" "schematic_id" {

url = "${var.image.factory_url}/schematics"

method = "POST"

request_body = local.schematic

}

data "http" "updated_schematic_id" {

url = "${var.image.factory_url}/schematics"

method = "POST"

request_body = local.update_schematic

}

resource "proxmox_virtual_environment_download_file" "this" {

for_each = toset(distinct([for k, v in var.nodes : "${v.host_node}_${v.update == true ? local.update_image_id : local.image_id}"]))

node_name = split("_", each.key)[0]

content_type = "iso"

datastore_id = var.image.proxmox_datastore

file_name = "talos-${split("_",each.key)[1]}-${split("_", each.key)[2]}-${var.image.platform}-${var.image.arch}.img"

url = "${var.image.factory_url}/image/${split("_", each.key)[1]}/${split("_", each.key)[2]}/${var.image.platform}-${var.image.arch}.raw.gz"

decompression_algorithm = "gz"

overwrite = false

}

# tofu/talos/talos-config.tf

resource "talos_machine_secrets" "this" {

talos_version = var.cluster.talos_version

}

data "talos_client_configuration" "this" {

cluster_name = var.cluster.name

client_configuration = talos_machine_secrets.this.client_configuration

nodes = [for k, v in var.nodes : v.ip]

endpoints = [for k, v in var.nodes : v.ip if v.machine_type == "controlplane"]

}

data "talos_machine_configuration" "this" {

for_each = var.nodes

cluster_name = var.cluster.name

cluster_endpoint = "https://${var.cluster.endpoint}:6443"

talos_version = var.cluster.talos_version

machine_type = each.value.machine_type

machine_secrets = talos_machine_secrets.this.machine_secrets

config_patches = each.value.machine_type == "controlplane" ? [

templatefile("${path.module}/machine-config/control-plane.yaml.tftpl", {

hostname = each.key

node_name = each.value.host_node

cluster_name = var.cluster.proxmox_cluster

cilium_values = var.cilium.values

cilium_install = var.cilium.install

})

] : [

templatefile("${path.module}/machine-config/worker.yaml.tftpl", {

hostname = each.key

node_name = each.value.host_node

cluster_name = var.cluster.proxmox_cluster

})

]

}

resource "talos_machine_configuration_apply" "this" {

depends_on = [proxmox_virtual_environment_vm.this]

for_each = var.nodes

node = each.value.ip

client_configuration = talos_machine_secrets.this.client_configuration

machine_configuration_input = data.talos_machine_configuration.this[each.key].machine_configuration

lifecycle {

# re-run config apply if vm changes

replace_triggered_by = [proxmox_virtual_environment_vm.this[each.key]]

}

}

resource "talos_machine_bootstrap" "this" {

node = [for k, v in var.nodes : v.ip if v.machine_type == "controlplane"][0]

endpoint = var.cluster.endpoint

client_configuration = talos_machine_secrets.this.client_configuration

}

data "talos_cluster_health" "this" {

depends_on = [

talos_machine_configuration_apply.this,

talos_machine_bootstrap.this

]

client_configuration = data.talos_client_configuration.this.client_configuration

control_plane_nodes = [for k, v in var.nodes : v.ip if v.machine_type == "controlplane"]

worker_nodes = [for k, v in var.nodes : v.ip if v.machine_type == "worker"]

endpoints = data.talos_client_configuration.this.endpoints

timeouts = {

read = "10m"

}

}

data "talos_cluster_kubeconfig" "this" {

depends_on = [

talos_machine_bootstrap.this,

data.talos_cluster_health.this

]

node = [for k, v in var.nodes : v.ip if v.machine_type == "controlplane"][0]

endpoint = var.cluster.endpoint

client_configuration = talos_machine_secrets.this.client_configuration

timeouts = {

read = "1m"

}

}

# tofu/talos/virtual-machines.tf

resource "proxmox_virtual_environment_vm" "this" {

for_each = var.nodes

node_name = each.value.host_node

name = each.key

description = each.value.machine_type == "controlplane" ? "Talos Control Plane" : "Talos Worker"

tags = each.value.machine_type == "controlplane" ? ["k8s", "control-plane"] : ["k8s", "worker"]

on_boot = true

vm_id = each.value.vm_id

machine = "q35"

scsi_hardware = "virtio-scsi-single"

bios = "seabios"

agent {

enabled = true

}

cpu {

cores = each.value.cpu

type = "host"

}

memory {

dedicated = each.value.ram_dedicated

}

network_device {

bridge = "vmbr0"

mac_address = each.value.mac_address

}

disk {

datastore_id = each.value.datastore_id

interface = "scsi0"

iothread = true

cache = "writethrough"

discard = "on"

ssd = true

file_format = "raw"

size = 20

file_id = proxmox_virtual_environment_download_file.this["${each.value.host_node}_${each.value.update == true ? local.update_image_id : local.image_id}"].id

}

boot_order = ["scsi0"]

operating_system {

type = "l26" # Linux Kernel 2.6 - 6.X.

}

initialization {

datastore_id = each.value.datastore_id

ip_config {

ipv4 {

address = "${each.value.ip}/24"

gateway = var.cluster.gateway

}

}

}

dynamic "hostpci" {

for_each = each.value.igpu ? [1] : []

content {

# Passthrough iGPU

device = "hostpci0"

mapping = "iGPU"

pcie = true

rombar = true

xvga = false

}

}

}

# tofu/talos/output.tf

output "client_configuration" {

value = data.talos_client_configuration.this

sensitive = true

}

output "kube_config" {

value = data.talos_cluster_kubeconfig.this

sensitive = true

}

output "machine_config" {

value = data.talos_machine_configuration.this

}Image Schematic#

# tofu/talos/image/schematic.yaml

customization:

systemExtensions:

officialExtensions:

- siderolabs/i915-ucode

- siderolabs/intel-ucode

- siderolabs/qemu-guest-agentMachine config#

# tofu/talos/machine-config/control-plane.yaml.tftpl
machine:
  network:
    hostname: ${hostname}
  nodeLabels:
    topology.kubernetes.io/region: ${cluster_name}
    topology.kubernetes.io/zone: ${node_name}

cluster:
  allowSchedulingOnControlPlanes: true
  network:
    cni:
      name: none
  proxy:
    disabled: true
  # Optional Gateway API CRDs
  extraManifests:
    - https://raw.githubusercontent.com/kubernetes-sigs/gateway-api/v1.1.0/config/crd/standard/gateway.networking.k8s.io_gatewayclasses.yaml
    - https://raw.githubusercontent.com/kubernetes-sigs/gateway-api/v1.1.0/config/crd/experimental/gateway.networking.k8s.io_gateways.yaml
    - https://raw.githubusercontent.com/kubernetes-sigs/gateway-api/v1.1.0/config/crd/standard/gateway.networking.k8s.io_httproutes.yaml
    - https://raw.githubusercontent.com/kubernetes-sigs/gateway-api/v1.1.0/config/crd/standard/gateway.networking.k8s.io_referencegrants.yaml
    - https://raw.githubusercontent.com/kubernetes-sigs/gateway-api/v1.1.0/config/crd/standard/gateway.networking.k8s.io_grpcroutes.yaml
    - https://raw.githubusercontent.com/kubernetes-sigs/gateway-api/v1.1.0/config/crd/experimental/gateway.networking.k8s.io_tlsroutes.yaml
  # The indent() widths below must match the nesting depth of the block scalars they are rendered into
  inlineManifests:
  - name: cilium-values
    contents: |
      ---
      apiVersion: v1
      kind: ConfigMap
      metadata:
        name: cilium-values
        namespace: kube-system
      data:
        values.yaml: |-
          ${indent(10, cilium_values)}
  - name: cilium-bootstrap
    contents: |
      ${indent(6, cilium_install)}

# tofu/talos/machine-config/worker.yaml.tftpl

machine:
  network:
    hostname: ${hostname}
  nodeLabels:
    topology.kubernetes.io/region: ${cluster_name}
    topology.kubernetes.io/zone: ${node_name}

Inline Manifests#

---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: cilium-install
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: cilium-install
    namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: cilium-install
  namespace: kube-system
---
apiVersion: batch/v1
kind: Job
metadata:
  name: cilium-install
  namespace: kube-system
spec:
  backoffLimit: 10
  template:
    metadata:
      labels:
        app: cilium-install
    spec:
      restartPolicy: OnFailure
      tolerations:
        - operator: Exists
        - effect: NoSchedule
          operator: Exists
        - effect: NoExecute
          operator: Exists
        - effect: PreferNoSchedule
          operator: Exists
        - key: node-role.kubernetes.io/control-plane
          operator: Exists
          effect: NoSchedule
        - key: node-role.kubernetes.io/control-plane
          operator: Exists
          effect: NoExecute
        - key: node-role.kubernetes.io/control-plane
          operator: Exists
          effect: PreferNoSchedule
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: node-role.kubernetes.io/control-plane
                    operator: Exists
      serviceAccountName: cilium-install
      hostNetwork: true
      containers:
        - name: cilium-install
          image: quay.io/cilium/cilium-cli-ci:latest
          env:
            - name: KUBERNETES_SERVICE_HOST
              valueFrom:
                fieldRef:
                  apiVersion: v1
                  fieldPath: status.podIP
            - name: KUBERNETES_SERVICE_PORT
              value: "6443"
          volumeMounts:
            - name: values
              mountPath: /root/app/values.yaml
              subPath: values.yaml
          command:
            - cilium
            - install
            - --version=v1.16.0
            - --set
            - kubeProxyReplacement=true
            - --values
            - /root/app/values.yaml
      volumes:
        - name: values
          configMap:
            name: cilium-values

Sealed Secrets Module#

# tofu/bootstrap/sealed-secrets/providers.tf
terraform {
  required_providers {
    kubernetes = {
      source  = "hashicorp/kubernetes"
      version = ">=2.31.0"
    }
  }
}

# tofu/bootstrap/sealed-secrets/variables.tf

variable "cert" {
  description = "Certificate for encryption/decryption"
  type = object({
    cert = string
    key  = string
  })
}

# tofu/bootstrap/sealed-secrets/config.tf

resource "kubernetes_namespace" "sealed-secrets" {
  metadata {
    name = "sealed-secrets"
  }
}

resource "kubernetes_secret" "sealed-secrets-key" {
  depends_on = [kubernetes_namespace.sealed-secrets]
  type       = "kubernetes.io/tls"

  metadata {
    name      = "sealed-secrets-bootstrap-key"
    namespace = "sealed-secrets"
    labels = {
      "sealedsecrets.bitnami.com/sealed-secrets-key" = "active"
    }
  }

  data = {
    "tls.crt" = var.cert.cert
    "tls.key" = var.cert.key
  }
}

Proxmox CSI Plugin Module#

# tofu/bootstrap/proxmox-csi-plugin/providers.tf
terraform {
  required_providers {
    kubernetes = {
      source  = "hashicorp/kubernetes"
      version = ">=2.31.0"
    }
    proxmox = {
      source  = "bpg/proxmox"
      version = ">=0.60.0"
    }
  }
}

# tofu/bootstrap/proxmox-csi-plugin/variables.tf

variable "proxmox" {
  type = object({
    cluster_name = string
    endpoint     = string
    insecure     = bool
  })
}

# tofu/bootstrap/proxmox-csi-plugin/config.tf

resource "proxmox_virtual_environment_role" "csi" {
  role_id = "CSI"
  privileges = [
    "VM.Audit",
    "VM.Config.Disk",
    "Datastore.Allocate",
    "Datastore.AllocateSpace",
    "Datastore.Audit"
  ]
}

resource "proxmox_virtual_environment_user" "kubernetes-csi" {
  user_id = "kubernetes-csi@pve"
  comment = "User for Proxmox CSI Plugin"
  acl {
    path      = "/"
    propagate = true
    role_id   = proxmox_virtual_environment_role.csi.role_id
  }
}

resource "proxmox_virtual_environment_user_token" "kubernetes-csi-token" {
  comment               = "Token for Proxmox CSI Plugin"
  token_name            = "csi"
  user_id               = proxmox_virtual_environment_user.kubernetes-csi.user_id
  privileges_separation = false
}

resource "kubernetes_namespace" "csi-proxmox" {
  metadata {
    name = "csi-proxmox"
    labels = {
      "pod-security.kubernetes.io/enforce" = "privileged"
      "pod-security.kubernetes.io/audit"   = "baseline"
      "pod-security.kubernetes.io/warn"    = "baseline"
    }
  }
}

resource "kubernetes_secret" "proxmox-csi-plugin" {
  metadata {
    name      = "proxmox-csi-plugin"
    namespace = kubernetes_namespace.csi-proxmox.id
  }

  data = {
    # token_secret keeps only the part of the token value after the last "=",
    # since the value appears to include the token ID as a prefix (<token id>=<secret>)
    "config.yaml" = <<EOF
clusters:
  - url: "${var.proxmox.endpoint}/api2/json"
    insecure: ${var.proxmox.insecure}
    token_id: "${proxmox_virtual_environment_user_token.kubernetes-csi-token.id}"
    token_secret: "${element(split("=", proxmox_virtual_environment_user_token.kubernetes-csi-token.value), length(split("=", proxmox_virtual_environment_user_token.kubernetes-csi-token.value)) - 1)}"
    region: ${var.proxmox.cluster_name}
EOF
  }
}

Volumes Module#

# tofu/bootstrap/volumes/providers.tf
terraform {
  required_providers {
    kubernetes = {
      source  = "hashicorp/kubernetes"
      version = ">= 2.31.0"
    }
    restapi = {
      source  = "Mastercard/restapi"
      version = ">= 1.19.1"
    }
  }
}

# tofu/bootstrap/volumes/variables.tf

variable "proxmox_api" {

type = object({

endpoint = string

insecure = bool

api_token = string

cluster_name = string

})

sensitive = true

}

variable "volumes" {

type = map(

object({

node = string

size = string