The Border Gateway Protocol (BGP) is used for exchanging routing information between so-called autonomous systems (AS). A common use case for BGP is routing traffic between Internet Service Providers (ISPs), or within large private networks.

BGP is therefore a perfect fit in any overengineered homelab setup where you want to needlessly overcomplicate things for the sake of learning.

Motivation#

In my modest Kubernetes-based homelab, I’m running Cilium as a Container Network Interface (CNI) together with their Gateway API implementation for networking.

Cilium also handles LoadBalancer IP Address Management (LB-IPAM), and can do L2 announcements using Address Resolution Protocol (ARP) to make services both visible and reachable on the local network, something I’ve covered in a previous article.

An alternative to ARP-based L2 announcements is BGP advertisements for exposing service endpoints to the broader network.

With Cilium v1.18 graduating their BGP control plane v2, and UniFi Gateways 4.1.10 adding support for BGP, I had no more excuses to not try it out.

Personally, the biggest motivation for trying out BGP was to learn a new technology I’ve been hearing about. Though there’s anecdotal evidence over at baremetalblog that switching to BGP from ARP solves lag issues for game servers.

Resources#

Many have already covered Cilium and BGP, including Gerard Samuel, Sander Sneekes, Andess Johansson, and Raj Singh, who all cover Cilium with Ubiquiti UniFi equipment.

Other combinations are covered by e.g. baremetalblog where they use the Cilium BGP control plane v1 together with an OPNsense router, and Zak Thompson who has written an informative article where he uses MetalLB to peer with a UniFi Dream Machine Pro.

For more advanced uses of BGP, Vegard Engen has written a three-part series on BGP in Kubernetes. He starts out by configuring BGP peering using MetalLB and Calico, before creating a VPN connection to the cloud, and finally establishes eBGP peering with a VPS.

I also had some input from Sebastian Klamar in the discussions section of my homelab repository where he also mentions Cilium’s EgressGateway feature.

A great place to start implementing BGP is the official Cilium documentation on the topic. Though if you prefer learning by doing, Isovalent, the company behind Cilium, has created simple introductory Labs that cover BGP on Cilium, LB-IPAM and BGP Service Advertisement, and Advanced BGP Features.

Ubiquiti also has a brief help section on BGP for their UniFi devices available at their Help Center where BGP neighbouring with an ISP and with AWS over IPsec Site-to-Site VPN is covered.

Overview#

We will mainly be focusing on advertising LoadBalancer IPs, although Cilium can just as easily advertise Service and/or Pod CIDR ranges directly.

For networking gear, I’m using a Cloud Gateway Max currently running UniFi Cloud Gateways 4.4.7 and Network 9.5.21.

On the Kubernetes side, I’m running a Proxmox virtualised Talos cluster using Cilium v1.18.3, which I’ve described in detail in a previous article. The cluster consists of three control plane nodes with the usual NoSchedule control-plane taint removed, thus making them workers as well.

For demonstrative purposes I’m also running a single worker-only node — that doesn’t actually do that much working. Hopefully, the presence of this node will be evident in the Smoke Test section below.

The network consists of three VLANs: 192.168.1.0/24 for cluster devices, 172.20.10.0/24 for services inside the cluster, and 10.144.12.0/24 for clients. The Router/Gateway answers at 192.168.1.1, as well as 172.20.10.1 and 10.144.12.1, while the three Talos control plane nodes are reachable at 192.168.1.100, .101, and .102. I’ve arbitrarily given the Router the Autonomous System Number (ASN) 65100 and Cilium the ASN 65200.

---

title: Network overview

---

flowchart TB

subgraph router["UniFi UCG Max Router"]

subgraph vlan2["VLAN 2 — Clients"]

cidr2["CIDR: 10.144.12.0/24"]

end

subgraph vlan1["VLAN 1 — Cluster"]

asn1["ASN: 65100"]

ip1["Gateway IP: 192.168.1.1"]

cidr1["CIDR: 192.168.1.0/24"]

end

subgraph vlan10["VLAN 10 — Cilium IP-Pool"]

cidr10["CIDR: 172.20.10.0/24"]

end

end

subgraph cluster["Kubernetes Cluster"]

subgraph ctrl-00["ctrl-00"]

vm00["IP: 192.168.1.100"]

end

subgraph ctrl-01["ctrl-01"]

vm01["IP: 192.168.1.101"]

end

subgraph ctrl-02["ctrl-02"]

vm02["IP: 192.168.1.102"]

end

subgraph work-00["work-00"]

vm03["IP: 192.168.1.110"]

end

end

subgraph cilium["Cilium"]

asn2["ASN: 65200"]

pool["IP-Pool CIDR: 172.20.10.0/24"]

end

vlan1 --- cluster

ctrl-02 ---work-00 --- ctrl-00 --- ctrl-01 --- ctrl-02

cluster --- cilium

Not shown in the diagram above are the default Talos Pod- and Service-CIDRs of 10.244.0.0/16 and 10.96.0.0/12, respectively.

In this article we will configure BGP peering between a UniFi router and a Kubernetes cluster using Cilium’s BGP control plane v2. We start out by configuring the router before we move on to instruct Cilium how to peer with it. We will then deploy a simple application to verify that BGP is working and perform a smoke test.

At the end we will take a look at some troubleshooting tips I picked up along the journey.

Disclaimer#

I have no formal training in networking. If you find any mistakes or have suggestions for improvement, I’d be happy to take both corrections and feedback.

The trudge and toil of transcribing my trek through technical tasks force me to think thoroughly through the topic — something I treasure and learn tremendously from. The words in this article are my own, though I can’t deny that I’ve had some assistance from probabilistic inference engines.

BGP#

RFC 4271 describes BGP as

[…] an inter-Autonomous System routing protocol.

The primary function of a BGP speaking system is to exchange network reachability information with other BGP systems.

Fundamentally, ARP and BGP solve different problems. ARP uses broadcasts to answer the question “Who has this IP address?”, whereas BGP answers the question “Where should traffic for this IP address be sent?” — typically using more efficient unicasts.

---

title: Broadcast vs Unicast

---

flowchart TB

subgraph Unicast["Unicast"]

U1(("📡")):::sender

U2(("💻️"))

U3(("💻️")):::receiver

U4(("💻️"))

U5(("💻️"))

end

U1 ~~~~ U2

U1 ===> U3

U1 ~~~~ U4

U1 ~~~~ U5

subgraph Broadcast["Broadcast"]

B1(("📡")):::sender

B2(("💻"))

B3(("💻")):::receiver

B4(("💻"))

B5(("💻"))

end

B1 ---> B2

B1 ===> B3

B1 ---> B4

B1 ---> B5

classDef sender stroke:#16a34a,stroke-width:2px;

classDef receiver stroke:#2563eb,stroke-width:2px;

A broadcast transmits data to all devices on the network, while unicast only transmits data to the intended recipient.

In essence, we can replace ARP-based L2 announcements with BGP advertisements, accepting added complexity in exchange for greater flexibility and potentially better network performance.

Using BGP should also allow us to use externalTrafficPolicy: Local for Services to preserve the client source IP, as this is normally not possible without using e.g. X-Forwarded-For headers.

There are two types of BGP routing: interior BGP (iBGP) within the same Autonomous System (same AS number), and exterior BGP (eBGP) between different Autonomous Systems (different AS numbers). The main difference is that new routes learned from an eBGP peer are re-advertised to both iBGP and eBGP peers, while routes learned from an iBGP peer are only re-advertised to eBGP peers.

In this article we will be using eBGP between two peers: a UniFi router and a Kubernetes cluster running Cilium.
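The iBGP/eBGP distinction and the re-advertisement rule can be written down in a few lines of Python. This is only an illustrative sketch: the function names are made up for this article, and real BGP implementations add exceptions (route reflectors, for instance) that are ignored here.

```python
def session_type(local_asn: int, peer_asn: int) -> str:
    """Classify a BGP session by comparing the two AS numbers."""
    return "ibgp" if local_asn == peer_asn else "ebgp"


def readvertise_to(learned_from: str) -> set:
    """Return the peer types a route learned over `learned_from` may be passed on to."""
    if learned_from == "ebgp":
        return {"ibgp", "ebgp"}
    if learned_from == "ibgp":
        return {"ebgp"}
    raise ValueError(f"unknown session type: {learned_from!r}")


# The router (65100) and Cilium (65200) use different ASNs, so they peer over eBGP.
print(session_type(65100, 65200))      # ebgp
print(sorted(readvertise_to("ibgp")))  # ['ebgp']
```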

For more information about the border gateway protocol, Packetswitch covers the topic in an easily digestible way.

UniFi#

Virtual Network (Optional)#

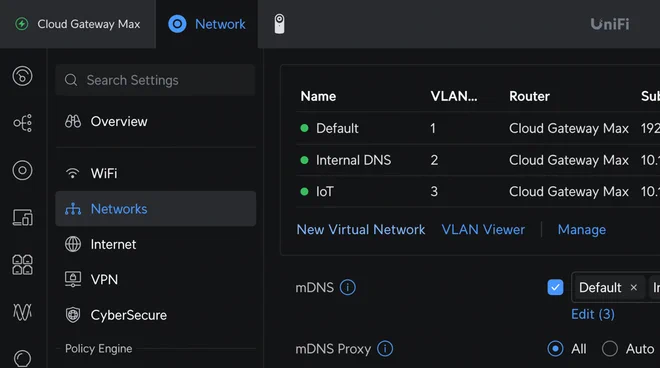

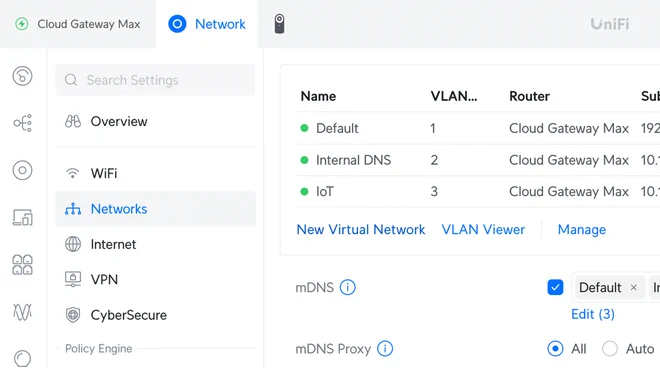

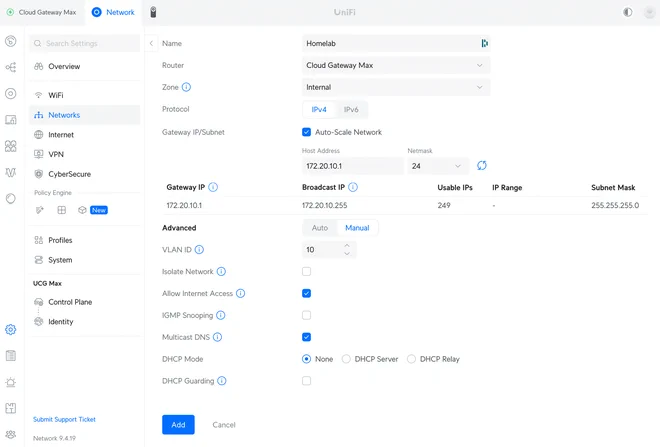

To help isolate the IPs advertised by our BGP peering network, we can create a new Virtual Network. This is optional, though I like to think of it as good practice.

A new virtual network can be created in the UniFi portal by navigating to Settings, then Networks, and finally clicking on New Virtual Network as shown in the image below.

Alternatively, you can navigate directly to https://<YOUR_GATEWAY_IP>/network/default/settings/networks/new.

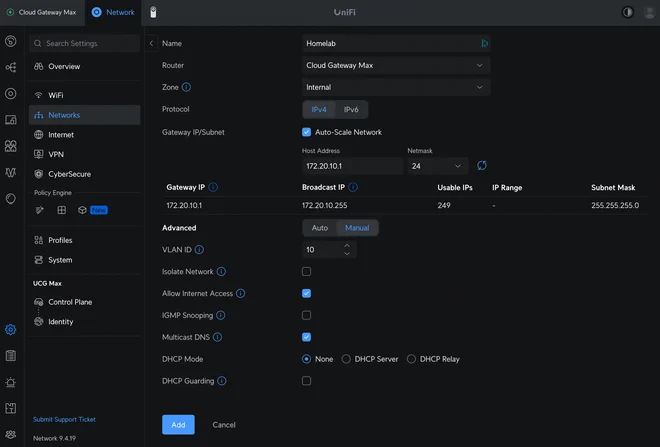

Decide on an IP range, preferably from one of the private IP ranges defined in RFC 1918, i.e. a subrange of either 10.0.0.0/8, 172.16.0.0/12, or 192.168.0.0/16. I decided to use 172.20.10.0/24, which gives 249 usable IPs according to UniFi[^1], and assigned it VLAN ID 10 as shown below.

I’ve also disabled DHCP as I don’t want the router to interfere with the Cilium-managed IPs.
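The count of 249 usable addresses can be double-checked with Python's standard ipaddress module. The sketch below assumes the reservations described in the footnote (network, gateway, broadcast, and UniFi's default .2–.5 block); the helper name usable_addresses is made up for illustration.

```python
import ipaddress


def usable_addresses(cidr: str, reserved_static: int = 4) -> list:
    """List addresses left after the gateway and UniFi's reserved block are removed."""
    network = ipaddress.ip_network(cidr)
    hosts = list(network.hosts())  # already excludes the network and broadcast addresses
    # Skip the gateway (first host) and the next `reserved_static` addresses (.2-.5).
    return hosts[1 + reserved_static:]


pool = usable_addresses("172.20.10.0/24")
print(len(pool), pool[0], pool[-1])  # 249 172.20.10.6 172.20.10.254
```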

Creating a BGP Configuration#

Before configuring BGP in UniFi, we first have to prepare a BGP configuration file in the FRR BGP format.

Pick two ASNs, preferably in the range 64512–65534, which is reserved for private use (first set aside in RFC 1930, with the current bounds given by RFC 6996). If instead you want to try out four-octet (32 bit) ASNs as defined in RFC 6793, you can pick a number between 4200000000 and 4294967294 inclusive, also according to RFC 6996.
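A quick way to sanity-check a candidate ASN against the two private-use ranges is a small Python helper. The function name is_private_asn is hypothetical; the bounds are the ones quoted above.

```python
# Private-use ranges for 16-bit and 32-bit AS numbers, inclusive on both ends.
PRIVATE_ASN_RANGES = ((64512, 65534), (4200000000, 4294967294))


def is_private_asn(asn: int) -> bool:
    """True when the ASN falls inside one of the private-use ranges."""
    return any(low <= asn <= high for low, high in PRIVATE_ASN_RANGES)


for asn in (65100, 65200, 65535):
    print(asn, is_private_asn(asn))
```

Both ASNs used in this article (65100 and 65200) pass the check, while 65535 falls just outside the 16-bit range.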

In the below configuration we’ve picked 65100 as the router ASN (line 1), and declared the router-id to be 192.168.1.1 (line 3).

We then configure a new peer-group called HOMELAB on line 9, expecting it to have ASN 65200 (line 10), before adding the cluster control plane nodes as peers on lines 13–15.

We’ve also disabled the need to explicitly define policies (line 6), basically advertising and accepting all routes.

Although the above configuration is sufficient for getting BGP peering working, we can be more explicit by requiring eBGP policies, removing the no on line 6 and adding a simple policy allowing all ipv4 unicast routes (lines 23–29).

We explicitly activate the peer-group for the ipv4 unicast address family before using next-hop-self to specify that the IP address we’re using for communication with the peer should be the next hop of the advertised routes as well. To be able to re-apply inbound policies without asking neighbours to resend everything we can use soft-reconfiguration inbound. This can also help us debug problems by keeping a local copy of the unmodified received routes.

The policy also allows routes defined in the route-map ALLOW-ALL (lines 33–34), i.e. all routes, both received from (in) and advertised to (out) the activated peer-group. Note that the number at the end of line 33 is the sequence number of the route-map entry, which decides the order in which entries are evaluated, not how many routes should be allowed.
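To make the role of that trailing number concrete, here is a simplified Python model of how a route-map is evaluated: entries are walked in ascending sequence order, the first matching entry decides, and a route that matches nothing is denied. The class and function names are made up for illustration, and the model ignores set actions and on-match clauses.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class RouteMapEntry:
    seq: int      # sequence number, e.g. the 10 in "permit 10"
    action: str   # "permit" or "deny"
    match: Optional[Callable[[str], bool]] = None  # None models an entry without match clauses


def route_map_permits(entries: List[RouteMapEntry], prefix: str) -> bool:
    """Walk entries in ascending sequence order; the first matching entry decides."""
    for entry in sorted(entries, key=lambda e: e.seq):
        if entry.match is None or entry.match(prefix):
            return entry.action == "permit"
    return False  # nothing matched: implicit deny


# ALLOW-ALL has a single permit entry without match clauses, so everything passes.
allow_all = [RouteMapEntry(seq=10, action="permit")]
print(route_map_permits(allow_all, "172.20.10.69/32"))  # True

# Hypothetical two-entry map: the deny at 10 is checked before the permit at 20.
ordered = [
    RouteMapEntry(seq=20, action="permit"),
    RouteMapEntry(seq=10, action="deny", match=lambda p: p.startswith("10.")),
]
print(route_map_permits(ordered, "10.244.0.0/16"))    # False
print(route_map_permits(ordered, "172.20.10.69/32"))  # True
```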


The extra configuration on lines 9–10 allows BGP to consider up to three paths as equal for load-balancing even if their autonomous system paths (as-path) are not identical, as long as the paths are of equal length.
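How the router then spreads traffic over those equal paths is up to its forwarding plane. A common approach is to hash each flow onto one of the next hops so that packets of the same connection stay on the same path. The Python sketch below illustrates that idea only; the hashing scheme and the pick_next_hop name are assumptions and not what the UniFi gateway is known to do.

```python
import hashlib


def pick_next_hop(flow: tuple, next_hops: list) -> str:
    """Map a flow to one next hop; the same flow always lands on the same hop."""
    digest = hashlib.sha256(repr(flow).encode()).digest()
    index = int.from_bytes(digest[:4], "big") % len(next_hops)
    return next_hops[index]


# The three control plane nodes act as equal-cost next hops for a Service IP.
next_hops = ["192.168.1.100", "192.168.1.101", "192.168.1.102"]
# Flow tuple: source IP, source port, destination IP, destination port, protocol.
flow = ("10.144.12.11", 57610, "172.20.10.69", 80, "tcp")
print(pick_next_hop(flow, next_hops))
```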

For better security we’ve also added the super secret hunter2 password which the peer neighbours will need to present to establish a BGP session.

To complicate things even further, we can create ip prefix-lists to allow only certain CIDR ranges to be advertised and accepted.

In our final configuration, we’ve declared the HOMELAB-IN ip prefix-list on line 34 to match the Cilium CIDR range of 172.20.10.0/24, with the le 32 (less than or equal to 32) suffix indicating that we accept any prefix length up to and including /32, i.e. individual host routes such as 172.20.10.69/32. The HOMELAB-IN ip prefix-list is then used in the RM-HOMELAB-IN route-map (line 38) to allow only routes matching the CIDR range to be accepted from the peer (line 28).

Similarly, we define a HOMELAB-OUT ip prefix-list (line 42), to be used in the RM-HOMELAB-OUT route-map (line 46) to allow only routes matching the CIDR range to be advertised to the peer (line 29). We’ve also explicitly added the redistribute connected statement on line 24 to advertise routes that are directly connected to the router.
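The difference between the two prefix-lists, one with le 32 and one without, can be illustrated with a small Python model of prefix-list matching. This is a sketch of the matching rule as I understand it (exact match without le/ge, a prefix-length window with them); the function name prefix_list_permits is made up.

```python
import ipaddress
from typing import Optional


def prefix_list_permits(entry_prefix: str, route: str,
                        le: Optional[int] = None, ge: Optional[int] = None) -> bool:
    """Check a route against a single 'permit <prefix> [ge N] [le M]' entry."""
    entry = ipaddress.ip_network(entry_prefix)
    candidate = ipaddress.ip_network(route)
    if not candidate.subnet_of(entry):
        return False  # the route lies outside the listed prefix
    if le is None and ge is None:
        return candidate.prefixlen == entry.prefixlen  # exact match only
    low = ge if ge is not None else entry.prefixlen
    high = le if le is not None else 32
    return low <= candidate.prefixlen <= high


# HOMELAB-IN: 172.20.10.0/24 le 32 accepts host routes inside the pool.
print(prefix_list_permits("172.20.10.0/24", "172.20.10.69/32", le=32))  # True
print(prefix_list_permits("172.20.10.0/24", "172.20.11.1/32", le=32))   # False

# HOMELAB-OUT: 192.168.1.0/24 without le only matches the /24 itself.
print(prefix_list_permits("192.168.1.0/24", "192.168.1.0/24"))          # True
print(prefix_list_permits("192.168.1.0/24", "192.168.1.100/32"))        # False
```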

You might expect to declare the ip prefix-lists at the top of the configuration file before they are referenced, though I experienced problems with the router discarding them when declared before the router bgp statement.

Upload BGP Configuration#

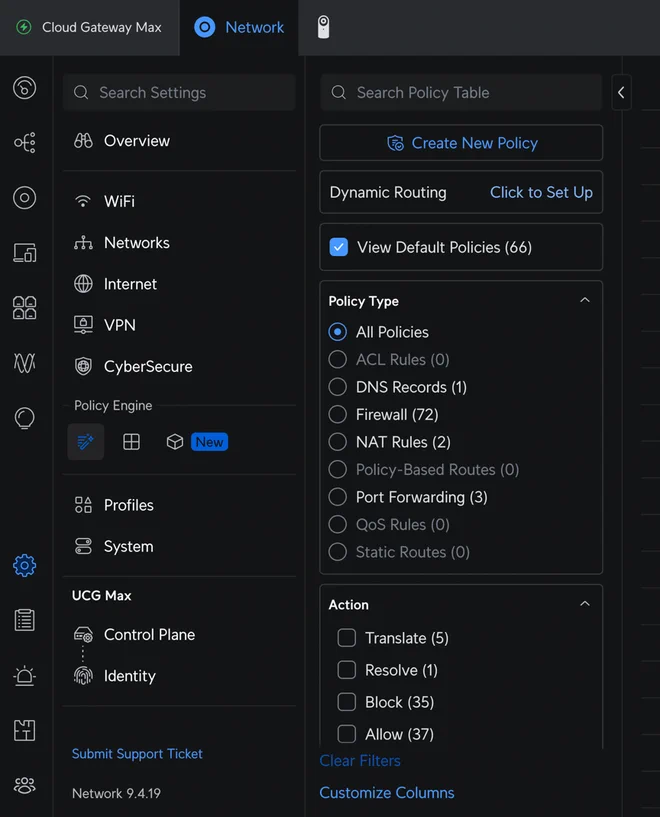

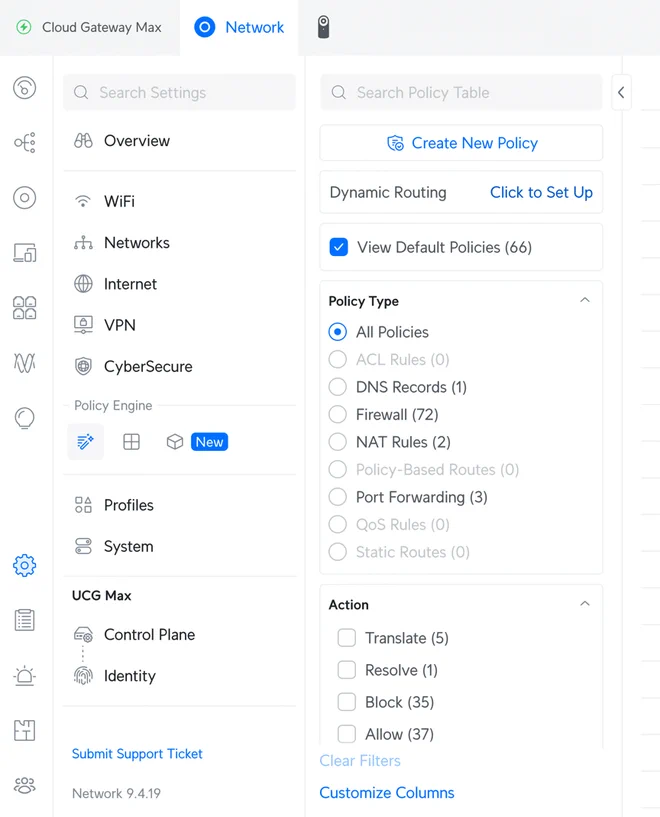

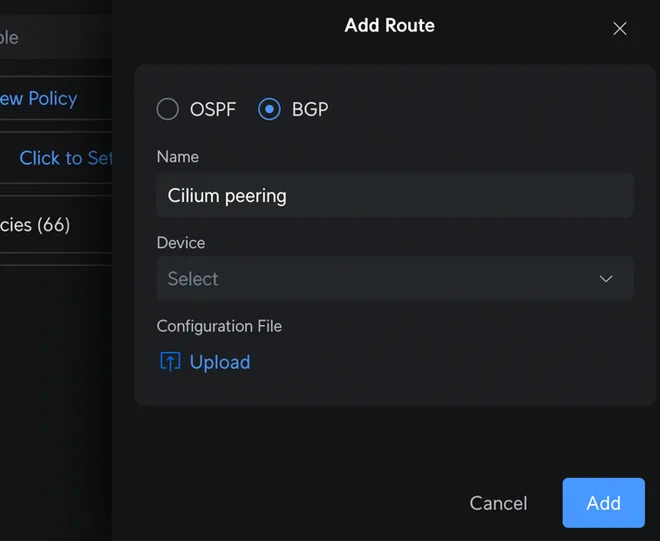

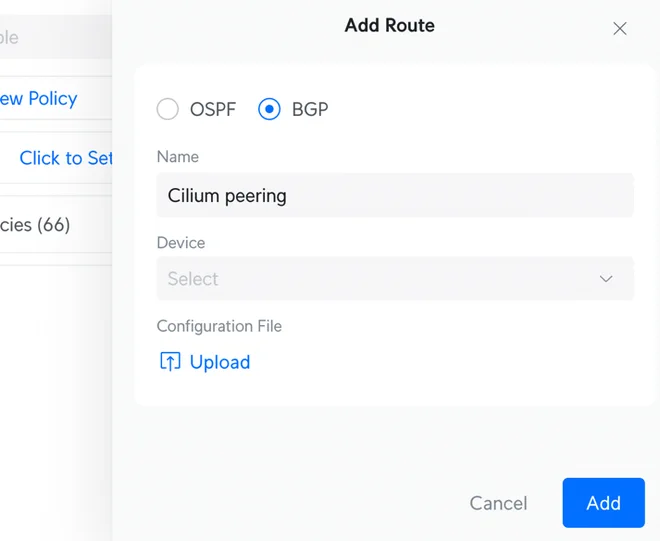

In the UniFi portal, BGP configuration can be found under Settings, then locate Policy Table under the Policy Engine section. Next, find Dynamic Routing and Click to Set Up and do just that.

Alternatively, navigate to https://<YOUR_GATEWAY_IP>/network/default/settings/policy-table/routing to find the configuration page directly.

Select the BGP radio button, give the route a memorable name, and find the device you want to peer in the dropdown menu as shown below.

Click Add to upload the BGP configuration as a .conf file.

Cilium#

With the UniFi router BGP configuration in place, we can now create Cilium resources to peer with it.

To enable the BGP control plane in Cilium, we simply add bgpControlPlane.enabled: true to the values.yaml file.

Here we’ve also added a separate namespace for holding Secrets used for BGP peer authentication.

The main resources we will be dealing with are described in detail in the Cilium BGP Control Plane Configuration documentation, though the short version is:

- CiliumBGPClusterConfig — BGP configuration for one or more cluster nodes based on labels.

- CiliumBGPPeerConfig — BGP peer configuration referenced by CiliumBGPClusterConfig resources.

- CiliumBGPAdvertisement — BGP advertisement configuration referenced by CiliumBGPPeerConfig resources.

There is also a CiliumBGPNodeConfigOverride resource which can be used to override auto-generated BGP configuration for specific nodes.

An attempt at a simplified entity–relationship model of the manifests we’re about to conjure looks like the following:

---

title: Simplified Cilium BGP Control Plane v2 resources

---

erDiagram

CiliumBGPClusterConfig {

string name

string[] peers

}

CiliumBGPPeerConfig {

string name

string authSecretRef

string[] families

}

CiliumLoadBalancerIPPool {

string name

string[] blocks

}

CiliumBGPAdvertisement {

string name

string[] label

string[] advertisements

}

CiliumBGPClusterConfig }o--o{ CiliumBGPPeerConfig: "match by name"

CiliumBGPClusterConfig }o--o{ Node: "match by label"

CiliumBGPPeerConfig }o--o{ CiliumBGPAdvertisement: "match by label"

CiliumBGPAdvertisement }o--o{ Service: "match by label"

CiliumLoadBalancerIPPool }o--o{ Service: "match by label"

Starting with the CiliumBGPClusterConfig resource, we create a nodeSelector to match all control-plane nodes (line 9). The worker node will therefore not be part of the BGP peering.

Next, we populate a bgpInstance with our arbitrarily assigned ASN for the cluster (line 12), and add the router as a peer with both ASN (line 15) and IP address (line 16). Note that we can define multiple bgpInstances along with multiple peers, each peer referencing its own PeerConfig resource by name as in line 18.

We then define a CiliumBGPPeerConfig for our single external peer. Make sure that the name on line 5 matches the referenced name in the cluster configuration. Since we added password protection to our router BGP configuration, we also need to reference a Secret containing the password (line 7).

For BGP advertisement to work, we need to define a set of Address Family Identifier (AFI) (line 11) and Subsequent Address Family Identifier (SAFI) (line 12) which we want to match with a CiliumBGPAdvertisement resource by label (line 15).

We then need to create the Secret referenced by the peer configuration by name (line 5) with a password key containing the BGP peer password (line 7).

The last BGP resource we need is a CiliumBGPAdvertisement. We label it with the expected matchLabel from our CiliumBGPPeerConfig resource (line 7) to configure advertisements for the peers using that configuration.

Next, we define that the LoadBalancerIP (line 13) of Service-type resources (line 10) should be advertised from Services labelled with the made-up label on line 16.

Note that we can also create advertisements of both PodCIDR ranges and Interface IPs, as well as ClusterIP and ExternalIP addresses for the Service type.
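Since the peer configuration, the advertisement, and the Services are all tied together by labels, it can help to see the matchLabels logic spelled out. The Python sketch below mimics a matchLabels selector using the labels from this article's manifests; the match_labels helper is a made-up name and only covers plain key/value matching, not matchExpressions.

```python
def match_labels(selector: dict, labels: dict) -> bool:
    """True when every key/value pair in the selector is present in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


# CiliumBGPPeerConfig -> CiliumBGPAdvertisement
peer_config_selector = {"bgp.cilium.io/advertise": "loadbalancer-services"}
advertisement_labels = {"bgp.cilium.io/advertise": "loadbalancer-services"}

# CiliumBGPAdvertisement -> Service
service_selector = {"bgp.cilium.io/advertise-service": "default"}
whoami_labels = {
    "bgp.cilium.io/ip-pool": "default",
    "bgp.cilium.io/advertise-service": "default",
}

print(match_labels(peer_config_selector, advertisement_labels))  # True
print(match_labels(service_selector, whoami_labels))             # True
print(match_labels(service_selector, {"app": "whoami"}))         # False
```

A Service that is missing the advertise-service label simply never shows up in the advertised routes, which is a handy first thing to check when a LoadBalancer IP is assigned but unreachable.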

Applying the above BGP resources, you should now be able to see CiliumBGPNodeConfig resources in your cluster by running kubectl get cbgpnode

❯ k get cbgpnode
NAME      AGE
ctrl-00   1d
ctrl-01   1d
ctrl-02   1d

If you have the Cilium CLI installed you can also run cilium bgp peers which should show something similar to

❯ cilium bgp peers
Node      Local AS   Peer AS   Peer Address   Session State   Uptime   Family         Received   Advertised
ctrl-00   65200      65100     172.20.10.1    established     34s      ipv4/unicast   0          0
ctrl-01   65200      65100     172.20.10.1    established     35s      ipv4/unicast   0          0
ctrl-02   65200      65100     172.20.10.1    established     35s      ipv4/unicast   0          0

where we want the Session State to say established. If not, go to the Troubleshooting section.
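If you want to check this from a script rather than by eye, the table is easy to parse. The Python sketch below assumes the whitespace-separated column layout shown above, with the session state in the fifth column; the unestablished_peers helper is a made-up name, and the ctrl-01 row in the sample is a hypothetical failing peer added for illustration.

```python
def unestablished_peers(output: str) -> list:
    """Return (node, peer address, state) for every session that is not established."""
    problems = []
    for line in output.strip().splitlines()[1:]:  # skip the header row
        fields = line.split()
        if len(fields) < 5:
            continue  # not a peer row
        node, peer, state = fields[0], fields[3], fields[4]
        if state != "established":
            problems.append((node, peer, state))
    return problems


sample = """\
Node      Local AS   Peer AS   Peer Address   Session State   Uptime   Family         Received   Advertised
ctrl-00   65200      65100     172.20.10.1    established     34s      ipv4/unicast   0          0
ctrl-01   65200      65100     172.20.10.1    active          0s       ipv4/unicast   0          0
"""

print(unestablished_peers(sample))  # [('ctrl-01', '172.20.10.1', 'active')]
```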

For our LoadBalancer Services to automatically be assigned IP addresses we can create a CiliumLoadBalancerIPPool to hand out IPs in our chosen CIDR range (line 8). We then make up a label (line 11) we can apply to Services we want to be assigned IPs from the pool.

Smoke Test#

As a quick smoke test we can create a bog-standard Pod and a Service of type LoadBalancer which picks it up.

Here we’re using Traefik’s whoami image (line 12), a simple Go server that shows HTTP requests.

We label the Service with the labels we conjured earlier: the one on line 8 to assign it a LoadBalancerIP from the pool defined earlier, and the one on line 9 to have it advertised by the configured BGP peers.

We also annotate the Service with a special annotation to give it a nice IP address which happens to be in the defined range (line 11).
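A requested IP only makes sense if it actually falls inside one of the pool's blocks. A minimal Python check using the standard ipaddress module could look like the following; ip_in_pool is a hypothetical helper and not part of Cilium.

```python
import ipaddress


def ip_in_pool(ip: str, blocks: list) -> bool:
    """True when the address falls inside at least one of the pool's CIDR blocks."""
    address = ipaddress.ip_address(ip)
    return any(address in ipaddress.ip_network(cidr) for cidr in blocks)


pool_blocks = ["172.20.10.0/24"]  # the block from the IP pool manifest
print(ip_in_pool("172.20.10.69", pool_blocks))  # True
print(ip_in_pool("192.168.1.69", pool_blocks))  # False
```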


Take note that we’re also using externalTrafficPolicy: Local (line 14) which should preserve the client source IP.

If everything went well, you should now be able to see the available BGP routes by running cilium bgp routes available ipv4 unicast

Node      VRouter   Prefix            NextHop   Age   Attrs
ctrl-00   65200     172.20.10.69/32   0.0.0.0   19s   [{Origin: i} {Nexthop: 0.0.0.0}]

or even the routes actually advertised by running cilium bgp routes advertised ipv4 unicast

Node      VRouter   Peer          Prefix             NextHop         Age   Attrs
ctrl-00   65200     172.20.10.1   172.20.10.100/32   192.168.1.100   23s   [{Origin: i} {AsPath: 65200} {Nexthop: 192.168.1.100}]

Trying to reach the Pod through the Service LoadBalancerIP with e.g. curl 172.20.10.69, we should now get

Hostname: whoami

IP: 127.0.0.1

IP: ::1

IP: 10.244.1.160

IP: fe80::98ac:29ff:fef8:701f

RemoteAddr: 10.144.12.11:57610

GET / HTTP/1.1

Host: 172.20.10.69

User-Agent: curl/8.7.1

Accept: */*

Observe that the RemoteAddr field shows an IP in the 10.144.12.0/24 subnet, our client subnet from where the request was sent, preserving the client source IP.

A caveat of using externalTrafficPolicy: Local is that a node only routes traffic to Pods that are running on that same node. If we force the Pod to run on a non-control-plane node, which does not match any CiliumBGPClusterConfig resources, by adding e.g.

spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          - matchExpressions:
              - key: node-role.kubernetes.io/control-plane
                operator: DoesNotExist

and re-creating the Pod, we can no longer reach it since the node it runs on is not part of the BGP peer-group.
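A rough mental model of which nodes end up advertising a Service IP under each traffic policy can be written as a short Python function. It is only a sketch based on the behaviour observed in this smoke test; advertising_nodes is a made-up name, and the Cluster branch reflects that every BGP-enabled node advertises the Service regardless of where the Pod runs.

```python
def advertising_nodes(policy: str, bgp_nodes: list, pod_nodes: list) -> list:
    """Return the BGP-enabled nodes expected to advertise the Service IP."""
    if policy == "Local":
        # Only BGP nodes that also host a backing Pod advertise the route.
        return sorted(set(bgp_nodes) & set(pod_nodes))
    if policy == "Cluster":
        # Every BGP node advertises; traffic is forwarded on to the Pod's node.
        return sorted(bgp_nodes)
    raise ValueError(f"unknown externalTrafficPolicy: {policy!r}")


bgp_nodes = ["ctrl-00", "ctrl-01", "ctrl-02"]

print(advertising_nodes("Local", bgp_nodes, ["ctrl-00"]))    # ['ctrl-00']
print(advertising_nodes("Local", bgp_nodes, ["work-00"]))    # []
print(advertising_nodes("Cluster", bgp_nodes, ["work-00"]))  # ['ctrl-00', 'ctrl-01', 'ctrl-02']
```

The empty list in the second case is the unreachable Service: no peering node hosts the Pod, so no route is advertised at all.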

Removing externalTrafficPolicy: Local, or setting it to the default Cluster value, we can again reach the Pod through the Service LoadBalancerIP. Notice, however, that the Pod is now advertised by all three control-plane nodes,

❯ cilium bgp routes advertised ipv4 unicast
Node      VRouter   Peer          Prefix            NextHop         Age   Attrs
ctrl-00   65200     172.20.10.1   172.20.10.69/32   192.168.1.100   2s    [{Origin: i} {AsPath: 65200} {Nexthop: 192.168.1.100}]
ctrl-01   65200     172.20.10.1   172.20.10.69/32   192.168.1.101   2s    [{Origin: i} {AsPath: 65200} {Nexthop: 192.168.1.101}]
ctrl-02   65200     172.20.10.1   172.20.10.69/32   192.168.1.102   2s    [{Origin: i} {AsPath: 65200} {Nexthop: 192.168.1.102}]

and that when trying to curl it, the RemoteAddr field now shows an IP from the default Talos PodCIDR 10.244.0.0/16 subnet.

Hostname: whoami

IP: 10.244.3.106

RemoteAddr: 10.244.1.112:57836

BGP Troubleshooting#

I didn’t experience any issues from the Cilium side of BGP peering, though I did experience some difficulties from the UniFi side with the BGP configuration not being properly applied.

Troubleshooting BGP issues from the Cilium side mainly consists of running either cilium bgp peers or cilium bgp routes, as well as checking the status field of the different Cilium BGP CRDs.

For troubleshooting BGP issues from the UniFi side, you first need SSH access to the router. This can be enabled by following the instructions from the Ubiquiti Help Center.

After getting root access, the first thing to check is the frr service status

root@Cloud-Gateway-Max:~# systemctl status frr

and possibly try to restart it

root@Cloud-Gateway-Max:~# service frr restart

What helped me the most was checking the applied BGP config by firing off vtysh -c "show running-config"

root@Cloud-Gateway-Max:~# vtysh -c "show running-config"

Building configuration...

Current configuration:
!
frr version 10.1.2
frr defaults traditional
hostname Cloud-Gateway-Max
domainname localdomain
allow-external-route-update
service integrated-vtysh-config
!
ip prefix-list HOMELAB-IN seq 10 permit 172.20.10.0/24 le 32
ip prefix-list HOMELAB-OUT seq 10 permit 192.168.1.0/24
!
router bgp 65100
 bgp router-id 192.168.1.1
 bgp bestpath as-path multipath-relax
 neighbor HOMELAB peer-group
 neighbor HOMELAB remote-as 65200
 neighbor HOMELAB password hunter2
 neighbor 192.168.1.100 peer-group HOMELAB
 neighbor 192.168.1.101 peer-group HOMELAB
 neighbor 192.168.1.102 peer-group HOMELAB
 !
 address-family ipv4 unicast
  redistribute connected
  neighbor HOMELAB next-hop-self
  neighbor HOMELAB soft-reconfiguration inbound
  neighbor HOMELAB route-map RM-HOMELAB-IN in
  neighbor HOMELAB route-map RM-HOMELAB-OUT out
  maximum-paths 3
 exit-address-family
exit
!
route-map RM-HOMELAB-IN permit 10
 match ip address prefix-list HOMELAB-IN
exit
!
route-map RM-HOMELAB-OUT permit 10
 match ip address prefix-list HOMELAB-OUT
exit
!
end

In a non-working state I noticed that the ip prefix-list entries weren’t being applied if I put them before the router bgp configuration, even though they’re listed above it in the Current configuration output, which annoys me to no end!

Other helpful vtysh commands are show ip bgp, show ip bgp summary, and show ip bgp neighbors. Either execute them as one-off commands with vtysh -c "<command>", or enter a vtysh session to run them interactively.

If everything is working correctly, show ip bgp should list all advertised routes, e.g.

root@Cloud-Gateway-Max:~# vtysh -c "show ip bgp"
BGP table version is 18, local router ID is 192.168.1.1, vrf id 0
Default local pref 100, local AS 65100
Status codes:  s suppressed, d damped, h history, u unsorted, * valid, > best, = multipath,
               i internal, r RIB-failure, S Stale, R Removed
Nexthop codes: @NNN nexthop's vrf id, < announce-nh-self
Origin codes:  i - IGP, e - EGP, ? - incomplete
RPKI validation codes: V valid, I invalid, N Not found

    Network          Next Hop            Metric LocPrf Weight Path
 *> 172.20.10.69/32  192.168.1.102                          0 65200 i
 *=                  192.168.1.100                          0 65200 i
 *=                  192.168.1.101                          0 65200 i

For a quick peer summary, run show ip bgp summary, e.g.

frr# show ip bgp summary

IPv4 Unicast Summary:
BGP router identifier 192.168.1.1, local AS number 65100 VRF default vrf-id 0
BGP table version 51
RIB entries 28, using 3584 bytes of memory
Peers 3, using 71 KiB of memory
Peer groups 1, using 64 bytes of memory

Neighbor        V     AS   MsgRcvd   MsgSent   TblVer  InQ OutQ  Up/Down   State/PfxRcd   PfxSnt Desc
192.168.1.100   4  65200      5772      5703       51    0    0  1d23h29m             8        1 N/A
192.168.1.101   4  65200      5760      5703       51    0    0  1d23h29m             5        1 N/A
192.168.1.102   4  65200      5754      5703       51    0    0  1d23h29m             3        1 N/A

Total number of neighbors 3

To get more details, run show ip bgp neighbors. This should show detailed information about the BGP neighbours. I found it helpful to run show ip bgp neighbors <neighbor-ip> <received-routes|filtered-routes|routes> to show all received-routes, any filtered-routes, and currently active routes from a specific neighbour.

Lastly, show route-map and show ip prefix-list can be useful for troubleshooting BGP route-maps and prefix-lists.

Summary#

All files presented in this article can be found in the resources folder of the article. Where applicable, they are written for use with Argo CD with Kustomize + Helm, but should be easily adaptable for other approaches using e.g. Flux CD.

UniFi#

Upload to UniFi router

router bgp 65100
  ! Router ID
  bgp router-id 192.168.1.1

  ! No policies needed for eBGP neighbors
  no bgp ebgp-requires-policy

  ! Create peer-group HOMELAB with remote ASN 65200
  neighbor HOMELAB peer-group
  neighbor HOMELAB remote-as 65200

  ! Add controller node IPs as neighbors
  neighbor 192.168.1.100 peer-group HOMELAB
  neighbor 192.168.1.101 peer-group HOMELAB
  neighbor 192.168.1.102 peer-group HOMELAB
exit

router bgp 65100
  ! Router ID
  bgp router-id 192.168.1.1

  ! Require explicit policy for eBGP neighbors
  bgp ebgp-requires-policy

  ! Relax autonomous system path matching for multipath routing
  bgp bestpath as-path multipath-relax
  maximum-paths 3

  ! Create peer-group HOMELAB with remote ASN 65200
  neighbor HOMELAB peer-group
  neighbor HOMELAB remote-as 65200
  neighbor HOMELAB password hunter2

  ! Add controller node IPs as neighbors
  neighbor 192.168.1.100 peer-group HOMELAB
  neighbor 192.168.1.101 peer-group HOMELAB
  neighbor 192.168.1.102 peer-group HOMELAB

  ! Apply policies
  address-family ipv4 unicast
    neighbor HOMELAB activate
    neighbor HOMELAB next-hop-self
    neighbor HOMELAB soft-reconfiguration inbound
    neighbor HOMELAB route-map ALLOW-ALL in
    neighbor HOMELAB route-map ALLOW-ALL out
  exit-address-family
exit

! Allow all IPs
route-map ALLOW-ALL permit 10
exit

router bgp 65100
  ! Router ID
  bgp router-id 192.168.1.1

  ! Require explicit policy for eBGP neighbors
  bgp ebgp-requires-policy

  ! Relax autonomous system path matching for multipath routing
  bgp bestpath as-path multipath-relax
  maximum-paths 3

  ! Create peer-group HOMELAB with remote ASN 65200
  neighbor HOMELAB peer-group
  neighbor HOMELAB remote-as 65200
  neighbor HOMELAB password hunter2

  ! Add controller node IPs as neighbors
  neighbor 192.168.1.100 peer-group HOMELAB
  neighbor 192.168.1.101 peer-group HOMELAB
  neighbor 192.168.1.102 peer-group HOMELAB

  ! Apply policies
  address-family ipv4 unicast
    redistribute connected
    neighbor HOMELAB activate
    neighbor HOMELAB next-hop-self
    neighbor HOMELAB soft-reconfiguration inbound
    neighbor HOMELAB route-map RM-HOMELAB-IN in
    neighbor HOMELAB route-map RM-HOMELAB-OUT out
  exit-address-family
exit

! VIPs within the service IP pool
ip prefix-list HOMELAB-IN seq 10 permit 172.20.10.0/24 le 32

! Accept Service IPs from cluster peers
route-map RM-HOMELAB-IN permit 10
  match ip address prefix-list HOMELAB-IN
exit

! Router client network
ip prefix-list HOMELAB-OUT seq 10 permit 192.168.1.0/24

! Broadcast client network for cluster peers
route-map RM-HOMELAB-OUT permit 10
  match ip address prefix-list HOMELAB-OUT
exit

Cilium#

kubectl kustomize --enable-helm ./resources/cilium | kubectl apply -f -

# cilium/kustomization.yaml
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - bgp-cluster-config.yaml
  - bgp-peer-config.yaml
  - bgp-peer-password.yaml
  - bgp-advertise-lb-services.yaml
  - bgp-ip-pool.yaml
helmCharts:
  - name: cilium
    repo: https://helm.cilium.io
    version: 1.18.3
    releaseName: cilium
    includeCRDs: true
    namespace: kube-system
    valuesFile: values.yaml
    valuesInline:
      kubeProxyReplacement: true
      # https://docs.cilium.io/en/latest/operations/performance/tuning/#ebpf-host-routing
      bpf:
        masquerade: true
      # https://docs.cilium.io/en/latest/network/concepts/ipam/
      ipam:
        mode: kubernetes
      externalIPs:
        enabled: true

# cilium/values.yaml
bgpControlPlane:
  enabled: true
  secretsNamespace:
    name: cilium-secrets

# cilium/bgp-cluster-config.yaml
apiVersion: cilium.io/v2
kind: CiliumBGPClusterConfig
metadata:
  name: cilium-unifi
spec:
  nodeSelector:
    matchLabels:
      node-role.kubernetes.io/control-plane: ""
  bgpInstances:
    - name: "65200"
      localASN: 65200
      peers:
        - name: "ucg-max-65100"
          peerASN: 65100
          peerAddress: 192.168.1.1
          peerConfigRef:
            name: peer-config

# cilium/bgp-peer-config.yaml
apiVersion: cilium.io/v2
kind: CiliumBGPPeerConfig
metadata:
  name: peer-config
spec:
  authSecretRef: bgp-peer-password
  gracefulRestart:
    enabled: true
  families:
    - afi: ipv4
      safi: unicast
      advertisements:
        matchLabels:
          bgp.cilium.io/advertise: loadbalancer-services

# cilium/bgp-peer-password.yaml
apiVersion: v1
kind: Secret
metadata:
  name: bgp-peer-password
  namespace: cilium-secrets
stringData:
  password: "hunter2"

# cilium/bgp-advertise-lb-services.yaml
apiVersion: cilium.io/v2
kind: CiliumBGPAdvertisement
metadata:
  name: loadbalancer-services
  labels:
    bgp.cilium.io/advertise: loadbalancer-services
spec:
  advertisements:
    - advertisementType: Service
      service:
        addresses:
          - LoadBalancerIP
      selector:
        matchLabels:
          bgp.cilium.io/advertise-service: default

# cilium/bgp-ip-pool.yaml
apiVersion: cilium.io/v2
kind: CiliumLoadBalancerIPPool
metadata:
  name: default-bgp-ip-pool
spec:
  blocks:
    - cidr: 172.20.10.0/24
  serviceSelector:
    matchLabels:
      bgp.cilium.io/ip-pool: default

Whoami#

kubectl kustomize --enable-helm ./resources/whoami | kubectl apply -f -

# whoami/kustomization.yaml
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - ns.yaml
  - svc.yaml
  - pod.yaml

# whoami/ns.yaml
apiVersion: v1
kind: Namespace
metadata:
  name: bgp-whoami

# whoami/pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: whoami
  namespace: bgp-whoami
  labels:
    app: whoami
spec:
  containers:
    - name: whoami
      image: ghcr.io/traefik/whoami:latest
      ports:
        - name: http
          containerPort: 80

# whoami/svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: whoami
  namespace: bgp-whoami
  labels:
    bgp.cilium.io/ip-pool: default
    bgp.cilium.io/advertise-service: default
  annotations:
    io.cilium/lb-ipam-ips: "172.20.10.69"
spec:
  type: LoadBalancer
  externalTrafficPolicy: Local
  selector:
    app: whoami
  ports:
    - name: web
      port: 80
      targetPort: http

[^1]: The first address (172.20.10.0) is reserved to identify the network, the next address (172.20.10.1) is reserved for the gateway, and the last address (172.20.10.255) is reserved for broadcast. By default, UniFi reserves .2–.5 for static assignments (presumably for network infrastructure), giving us a total of 249 usable IPs in the range 172.20.10.6–172.20.10.254. Fiddling with the DHCP range, we should be able to “free” these four addresses if we wanted to.